NYC Mesh OSPF Routing Methodology

Positives and negatives

OSPF is an interesting choice as an in-neighborhood routing protocol because of its ease of setup (auto convergence, no ASNs), and how ubiquitous it is -- nearly every cheap and expensive commercial and open device supports it. These two positives alone make OSPF worth considering.

On the downside, it is not specifically designed for an ad hoc mesh, it trusts blindly, and it has very few tunables. Additionally, there are a few technical challenges, such as the lack of link-local address use, only advertising connected networks (not summaries), and some common defaults on various platforms.

Many of these challenges can be overcome by taking some care to make good choices for options when setting up a network.

OSPF Selection

NYC Mesh has chosen OSPF as its standard mesh routing protocol. This may be controversial, as _most_ mesh networks in Europe use custom mesh routing protocols or encrypted routing protocols. We have chosen this path because:

- OSPF is an open standard with implementations on many platforms, open and closed, including cheap older professional switches

- OSPF hugely reduces the burden for installers and members to maintain the network

- OSPF cooperates well with other protocols such as BGP

- Other Mesh networks (CTWUG in South Africa for example) have scaled OSPF to 1000+ routers.

Ok, but why OSPF for NYC Mesh?

NYC Mesh utilizes a wide range of hardware with differing capacities and weather resiliency characteristics. Because the network is volunteer-driven and operated, it's important that it be resilient, but also easy to maintain and scale. OSPFv2 Point-to-Multipoint allows us to modify routing tables and plan for expansion without overly-complicated configuration planning.

To standardize across the network, each router has a Mesh Bridge Interface on the OSPF Area with default cost of 10 to all adjacent neighbors. This ensures symmetry in link costs on both ends of the link, keeping bi-directional traffic following the same path. For each "hop" to an internet exit, each router incurs its link cost to transit to the next hop. By calculating the lowest cost to an internet exit, the local router sends its traffic on the lowest absolute-cost path to an Internet exit.

Example: Node path to internet exit with all default costs

Node A > 10 > Hub > 10 > Supernode > 1 > Public Internet

In the above example, the Node incurs cost 21 to exit to the Public Internet. Absent a lower-cost link becoming available, this will be the route for all internet traffic to and from Node A.
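
The arithmetic behind this example can be sketched in a few lines of Python. This is only an illustration of how the per-hop costs add up; the `path` list and the `path_cost` helper are hypothetical names introduced here, not part of any NYC Mesh tooling.

```python
# Hops along the example path, in order, with the link cost of each hop
# (values taken from the example above).
path = [
    ("Node A", "Hub", 10),
    ("Hub", "Supernode", 10),
    ("Supernode", "Public Internet", 1),
]


def path_cost(hops):
    """Total cost of a path: the sum of the link cost of every hop."""
    return sum(cost for _src, _dst, cost in hops)


print(path_cost(path))  # 21
```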

Now that we've standardized route costs, we need to design priority and redundancy to take advantage of nodes clustered around each other while preferring higher-capacity links.

The WDS bridge: ensuring Hub-and-spoke routes are preferred over WDS routes

NYC Mesh uses Omnitik wireless routers at almost all member nodes to automatically connect to each other, providing numerous backup routes in case of hardware failure or network changes, but these connections are often slower and less reliable than point-to-point and point-to-multipoint connections in our Hub-and-Spoke model. To account for this, we put the Omnitik<>Omnitik WDS links on a separate "WDS Bridge" on every Omnitik router with default cost of 100.

Example: Node preferring Mesh Bridge over "shorter" WDS links

Node A > 100 (WDS) > Node B > 10 > Supernode X > 1 > Public Internet

Node A > 10 > Microhub > 10 > Hub > 10 > Supernode Y > 1 > Public Internet

In this example, Node A prefers to exit via Supernode Y as the cost it incurs is 31, versus 111 via Supernode X. If we did not have higher WDS costs, Node A would instead prefer the shorter link to Supernode X, but would very likely experience poorer performance.
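
To make the comparison concrete, the following Python sketch runs a lowest-cost path search (Dijkstra's algorithm, the same family of algorithm OSPF uses) over the two example paths. The topology is only the one drawn in the example, and `build_graph` and `lowest_cost_path` are hypothetical helper names chosen for illustration.

```python
import heapq


def build_graph(wds_cost):
    """Build the example topology; wds_cost is the cost of the Node A <> Node B WDS link."""
    links = [
        ("Node A", "Node B", wds_cost),
        ("Node B", "Supernode X", 10),
        ("Supernode X", "Public Internet", 1),
        ("Node A", "Microhub", 10),
        ("Microhub", "Hub", 10),
        ("Hub", "Supernode Y", 10),
        ("Supernode Y", "Public Internet", 1),
    ]
    graph = {}
    for a, b, cost in links:
        # Costs are symmetric, so each link is stored in both directions.
        graph.setdefault(a, {})[b] = cost
        graph.setdefault(b, {})[a] = cost
    return graph


def lowest_cost_path(graph, source, target):
    """Return (total_cost, path) for the lowest-cost path, or None if unreachable."""
    queue = [(0, source, [source])]
    visited = set()
    while queue:
        cost, node, path = heapq.heappop(queue)
        if node == target:
            return cost, path
        if node in visited:
            continue
        visited.add(node)
        for neighbor, link_cost in graph[node].items():
            if neighbor not in visited:
                heapq.heappush(queue, (cost + link_cost, neighbor, path + [neighbor]))
    return None


# With the WDS link at cost 100, Node A exits via Supernode Y (cost 31).
print(lowest_cost_path(build_graph(100), "Node A", "Public Internet"))
# If the WDS link had the default cost of 10, Node A would exit via Supernode X (cost 21).
print(lowest_cost_path(build_graph(10), "Node A", "Public Internet"))
```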

For more details on the hybrid Hub-and-Spoke + Mesh model we deploy, see the Mesh page.

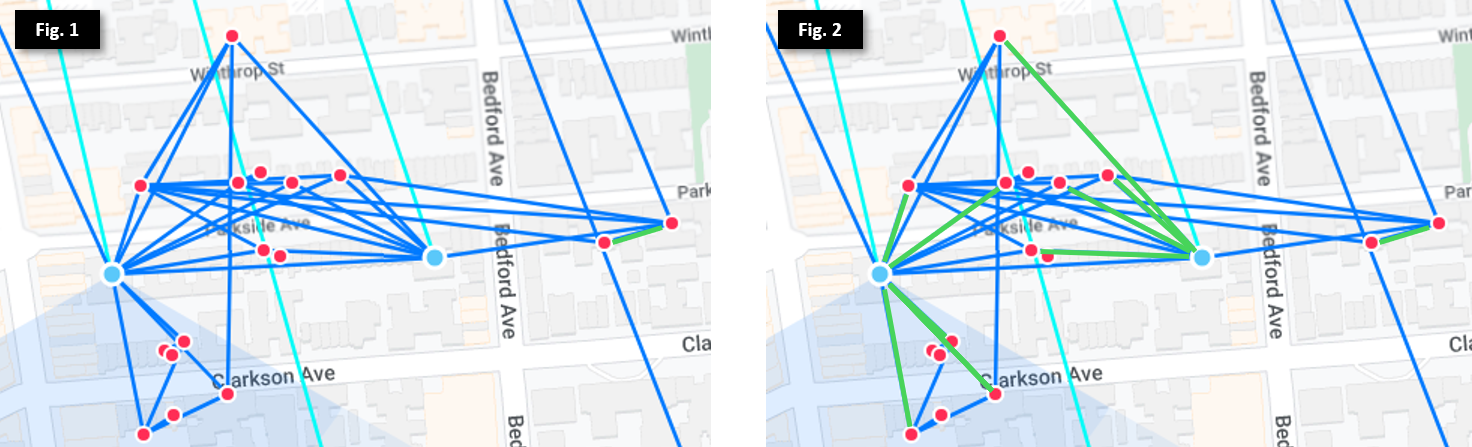

Example: Prospect Lefferts Garden

In Figure 1, we see many nodes (marked as red dots) clustered around 2 Microhubs (marked as blue dots) in Prospect Lefferts Garden, as well as multiple exit routes to the north. While most of the nodes will automatically find the best exit, there are some that may have equal costs through multiple exits. To mitigate this, we set preferred routes (via hardware like SXTs, or software with virtual wireless interfaces) on the Mesh Bridge, as illustrated by the green lines in Figure 2. This ensures each node selects its fastest and most stable route to send and receive internet traffic.

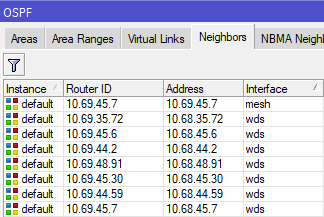

We can see this in action on the Omnitik: 10.69.45.7 is on the "Mesh" bridge interface, meaning it incurs cost 10 to transit. All other adjacent routers are on the "WDS" bridge interface, and incur cost 100 to transit. This setup ensures the local node prefers the 4507 Microhub as its exit route, but also has backup routes in case 4507 goes offline or one of its upstream links is broken.

By implementing this architecture across all routers on the network, we now have high resiliency to outages, scalability, and minimal configuration effort.

Scaling out the Hub-and-Spoke model

This baseline architecture works great in individual neighborhoods and on relatively linear routes, but with over a thousand nodes connecting to 60+ Hubs with links crisscrossing New York City, some planning and manual intervention is required to ensure stability and speed for all connected members.

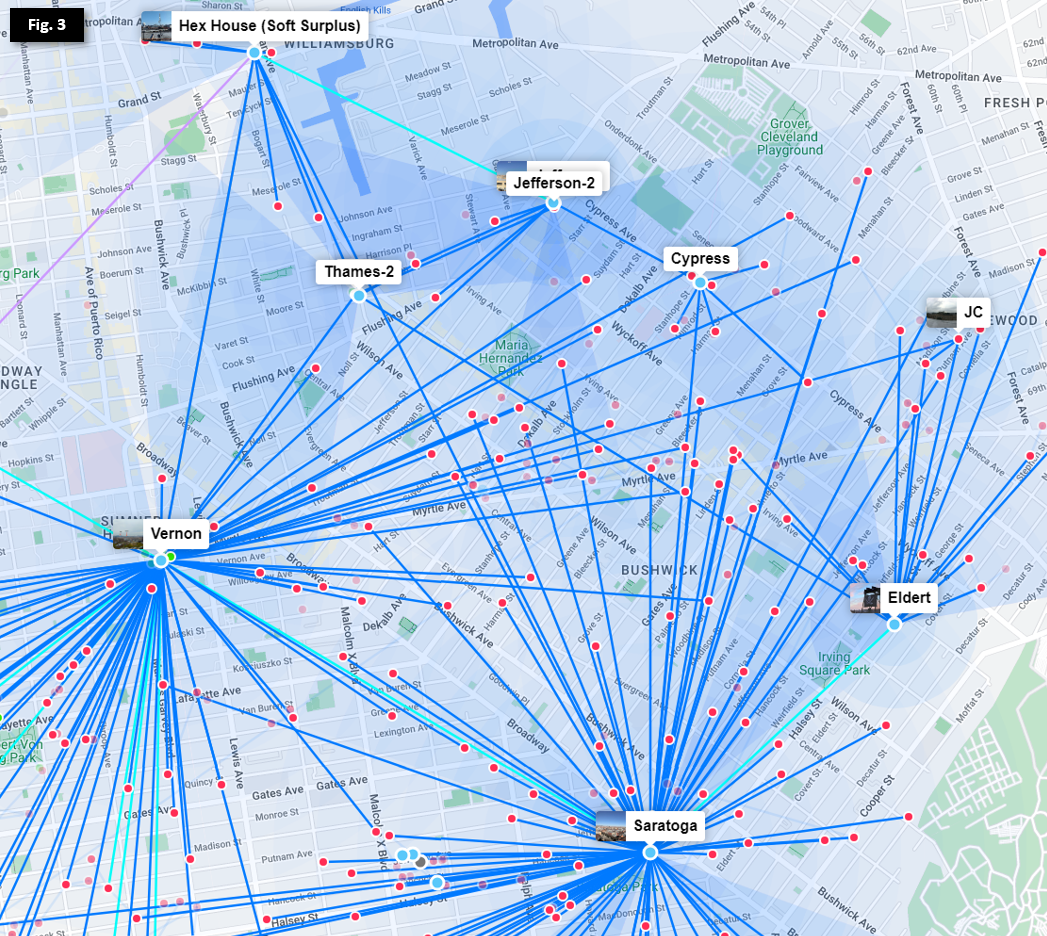

In the Bed-Stuy, Bushwick, Ridgewood, and Crown Heights neighborhoods shown above in Figure 3, we have over a dozen Hubs serving hundreds of members. Efficient routing and redundancy across multiple wireless links requires further options for route cost between 10 and 100.

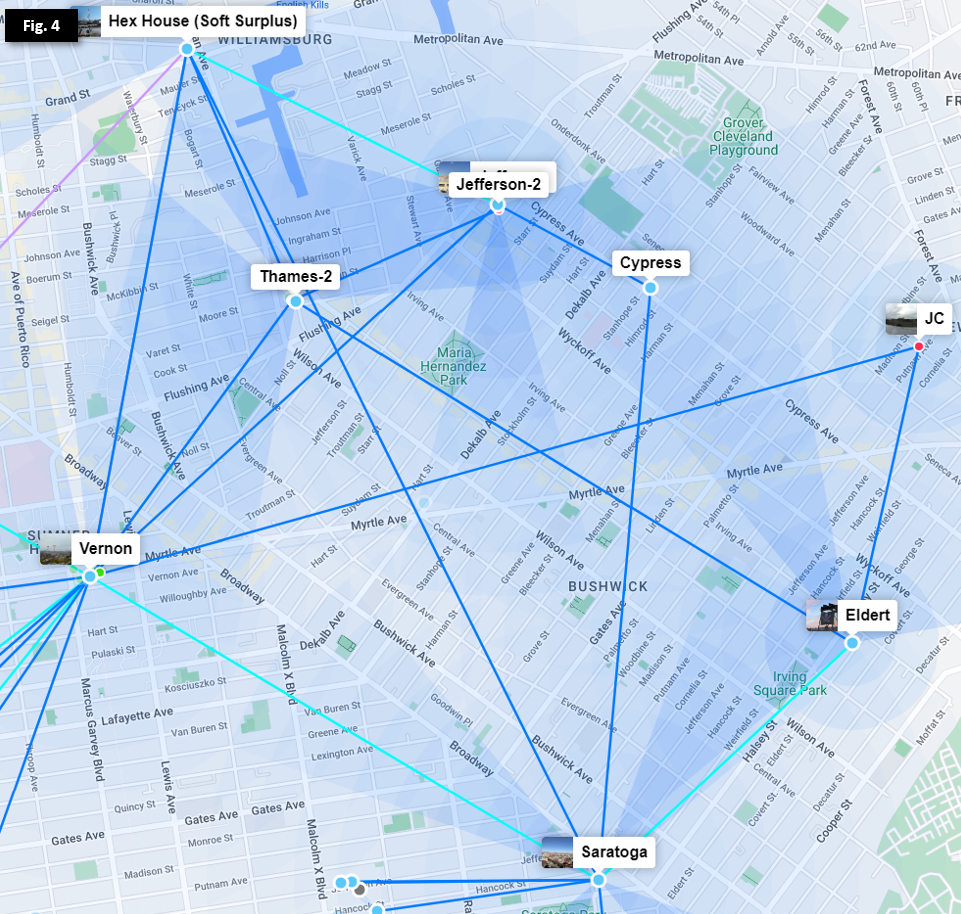

In efforts to minimize single points of failure in our network (hubs having only 1 exit route) and provide dedicated backup routes in cases of weather impacting high-frequency links, we deploy redundant links in a "triangle scheme" so that each hub has multiple low-cost routes to exit. To see this deployed, let's remove the nodes from the above photo and focus on the Hubs.

As we can see in Figure 4, most Hubs have 2 or more exit routes so that an outage of an individual link or Hub will not isolate any other Hub. Additional routes leading off Figure 4 allow multiple exits from both Vernon and Hex House, as well as other lower-capacity links through smaller Microhubs and nodes.

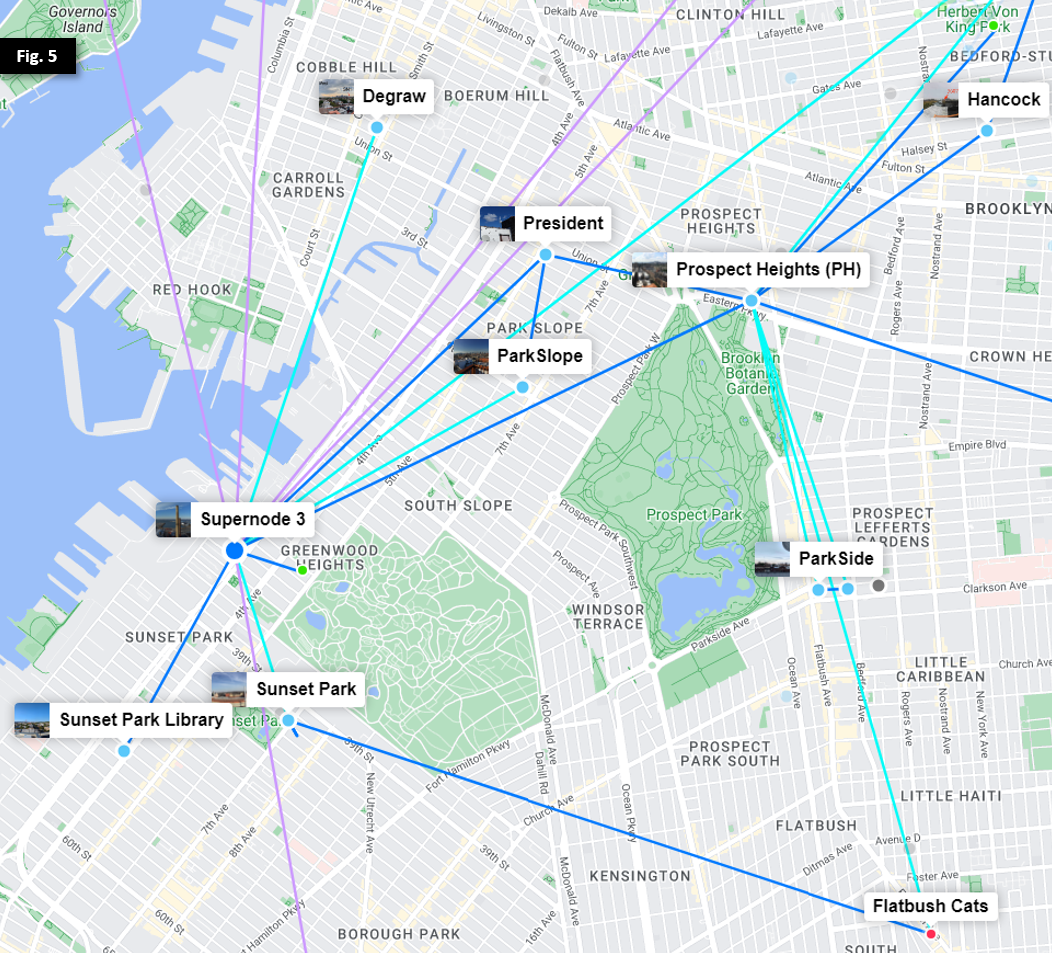

In Figure 5, we observe a similar trend as we move southwest towards Prospect Park and Supernode 3 at Industry City.

Sidebar: The case against OSPF automation and summarization

Given the scale of the problem and continued growth, we must consider an important question: why not implement automation to dynamically adjust OSPF costs based on link quality, and/or utilize summarization and redistribution to simplify planning?

As mentioned above, our network design is meant in part to balance the following three goals:

- Resiliency across a widely distributed network in a dense urban environment

- Simplicity of configuration and maintenance by a 100% volunteer team of architects, engineers, coders and enthusiasts

- Scalability for future expansion

Further, NYC Mesh has no CEO, directors, or employees, and the board intentionally does not have decision-making authority over non-financial/legal matters; as documented in the Network Commons License, the design, planning, maintenance and support of NYC Mesh is done solely by community members and volunteers. While we do have highly-skilled volunteer network engineers, the day-to-day maintenance and monitoring of the network is done by members with varied skill levels; we generally prefer easy-to-maintain solutions over highly customized configurations requiring extensive knowledge and training.

Finally, as we primarily rely on member donations to maintain and expand the network, we generally avoid high-end enterprise-grade hardware or software requiring recurring subscription fees and support contracts to minimize operational expenses. As NYC Mesh continues to grow, we may need to adopt more robust and dynamic routing and load-balancing techniques, and will look to our community to collectively decide on the path forward.

Tying it all together: Load-balancing across varied hardware

NYC Mesh uses a broad range of purchased and donated Ubiquiti and Mikrotik hardware with varying capacity, capabilities, and rain fade resilience, and members are allowed to extend the network at will pursuant to the Network Commons License. Because our OSPF link costs are static and do not automatically increase or decrease based on link quality, limiting ourselves to just two options for link cost will quickly cause issues as the network grows. Here are just a few use cases to consider:

- Avoiding unintentional bridging of Hubs with low-capacity connections as members join and add equipment and links

- Intentional design of secondary and tertiary routes for major Hubs to mitigate rain fade and hardware failure

- Multi-antenna routes with differing performance characteristics (primarily high-capacity 60GHz links with dedicated 5GHz backup hardware)

- Minimizing impacts from misconfigured DIY and new infrastructure installations

To meet these goals, we need to set up custom link costs on backup routes as well as between high-traffic Hubs.

Example: Microhubs between Major Hubs

Our Vernon and Prospect Heights Hubs collectively carry more than 80% of NYC Mesh network traffic in Brooklyn. By design, each Hub's primary exit is through different Supernodes to the public Internet (Vernon through Supernode 10 in Manhattan, and Prospect Heights through Supernode 3 in Industry City). To allow redundancy between their exits, a dedicated 60GHz link (in teal) is deployed between the two, but Vernon and Prospect Heights also have more preferable secondary links (illustrated further below in Figure 7). This requires the link to have a slightly higher cost (in this case, 15) so that each Hub prefers other backup routes in case of primary exit link outages.

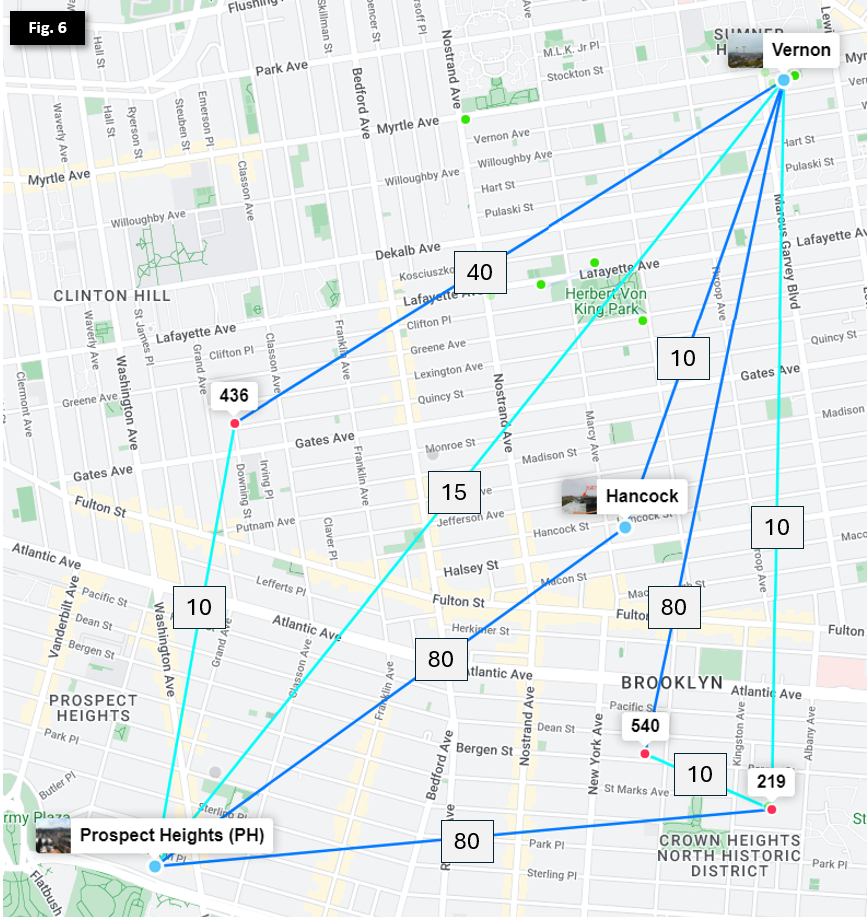

To make matters more complicated, Microhubs in between Vernon and Prospect Heights connect to both Hubs to provide their own redundancy, as illustrated in Figure 6.

Note: nodes and sector coverage have been omitted

To ensure each Microhub prefers the fastest route and they don't bridge Vernon and Prospect Heights by having additive link costs lower than the Vernon <> Prospect Heights 60GHz link, we need to manually set the backup links with higher costs.

- 436

  - Primary: Prospect Heights via AF60LR PtMP (default 10 on Mesh Bridge)

  - Secondary: Vernon via Litebeam 5ac PtMP (manual 40 on OSPF area)

- Hancock 3607

  - Primary: Vernon via Litebeam 5ac PtP (default 10 on Mesh Bridge)

  - Secondary: Prospect Heights via Litebeam LR PtMP (manual 80 on OSPF area)

- St Marks 219

  - Primary: Vernon via AF60LR PtMP (default 10 on Mesh Bridge)

  - Secondary: Prospect Heights via Litebeam LR PtMP (manual 80 on OSPF area)

- 540

  - Primary: St Marks 219 via LHG60 PtMP (default 10 on Mesh Bridge)

  - Secondary: Vernon via Litebeam 5ac PtMP (manual 80 on OSPF area)
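
As a rough sanity check, the costs listed above can be compared against the cost-15 Vernon <> Prospect Heights link. The Python sketch below sums each Microhub's two link costs and flags any Microhub whose combined cost is lower than the direct link. Only the three Microhubs that connect directly to both Hubs are included (540 reaches Prospect Heights only through St Marks 219), and the names `microhubs`, `transit_cost`, and `bridging_microhubs` are hypothetical.

```python
# Cost of the dedicated Vernon <> Prospect Heights 60GHz link.
DIRECT_LINK_COST = 15

# Link cost from each Microhub to each major Hub (from the list above).
microhubs = {
    "436": {"Vernon": 40, "Prospect Heights": 10},
    "Hancock 3607": {"Vernon": 10, "Prospect Heights": 80},
    "St Marks 219": {"Vernon": 10, "Prospect Heights": 80},
}


def transit_cost(links):
    """Cost of crossing from one major Hub to the other through this Microhub."""
    return links["Vernon"] + links["Prospect Heights"]


def bridging_microhubs(hubs, direct_cost):
    """Microhubs whose combined link cost is lower than the direct Hub-to-Hub link."""
    return [name for name, links in hubs.items() if transit_cost(links) < direct_cost]


for name, links in microhubs.items():
    print(name, transit_cost(links))

print(bridging_microhubs(microhubs, DIRECT_LINK_COST))  # []
```

An empty list means none of these Microhubs offers a cheaper path between the two Hubs than the dedicated link.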

Determining link costs

Note that there is no firm methodology or formula for calculating optimal custom link costs in this model, though backup links are generally set between 20 and 80 depending on upstream impacts. Sufficient buffer is allocated between primary and secondary routes to allow expansion and updates with minimal changes required to upstream OSPF costs or routes. When selecting a custom link cost that may bridge segments of the network, the following factors should be taken into account:

- What is the preferred exit path for the local router?

  - This will normally be the link with the highest capacity (60 GHz), and will usually have default cost 10 on the Mesh Bridge to keep configuration simple

- Will the backup link connect to the same Hub as the primary, or a different one?

  - When the primary and secondary/backup links connect to the same upstream Hub, it's generally safe to set the backup link to cost 20 without further impacts

- What are the current primary and secondary exit routes & costs for each upstream Hub?

  - This gives us an understanding of where traffic will route along each hop of the network

- In the event of a primary link failing on an upstream Hub, will the new bridged link take priority over an existing secondary route?

  - Unless the local router is intended to override an existing upstream Hub's secondary/backup route, this defines the minimum cost of the secondary link: the primary link cost + secondary link cost + exit cost after the secondary link should be greater than the existing exit cost at the preferred Hub. This ensures that if the upstream Hub's primary route is interrupted, it will continue to use its existing preferred backup route.

- (Optional) For the local router, is the upstream Hub's secondary exit preferred over the local router's secondary route?

  - In some cases, the secondary route of the upstream Hub may have bandwidth constraints or other limitations that make the local router's backup more preferable in the event that the upstream Hub's primary exit is interrupted. In this case, the total cost of the local backup exit route should be less than the local primary link cost + the upstream Hub's secondary exit cost, but still high enough to not cause the upstream Hub to prefer the new bridged link.

  - Testing this scenario can be challenging in production environments without actively disabling preferred links; a speed test from the local router to the second-order upstream backup router is usually sufficient for planning purposes.
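
The minimum-cost rule in the fourth question can be written as a small check. The Python sketch below reflects one reading of that rule, in which the "existing exit cost at the preferred Hub" is taken to be the upstream Hub's current backup exit cost; that interpretation, and the function and parameter names, are assumptions made for illustration. The sample values use Microhub 436 (primary link cost 10 to Prospect Heights, secondary link cost 40 to Vernon), Vernon's exit cost of 11, and the cost-26 secondary exit of Prospect Heights.

```python
def keeps_existing_backup(primary_link_cost, secondary_link_cost,
                          exit_cost_after_secondary, upstream_backup_exit_cost):
    """True if the path through the new bridged link costs more than the
    upstream Hub's existing backup exit, so the Hub keeps its current backup."""
    bridged_cost = primary_link_cost + secondary_link_cost + exit_cost_after_secondary
    return bridged_cost > upstream_backup_exit_cost


def min_secondary_cost(primary_link_cost, exit_cost_after_secondary,
                       upstream_backup_exit_cost):
    """Smallest whole-number secondary link cost that satisfies the rule.
    OSPF interface costs are at least 1, so the result is never lower than 1."""
    needed = upstream_backup_exit_cost - primary_link_cost - exit_cost_after_secondary + 1
    return max(1, needed)


# Microhub 436: 10 + 40 + 11 = 61, which is greater than 26.
print(keeps_existing_backup(10, 40, 11, 26))  # True
# Lowest secondary cost that would still satisfy the rule in this case.
print(min_secondary_cost(10, 11, 26))  # 6
```

The gap between the computed minimum and the cost actually chosen is the "buffer" that leaves room for later expansion.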

That's a lot of factors to consider! Let's see what this looks like in the real world.

Example: Determining Primary, Secondary, and Tertiary costs

Note: some additional links and hubs omitted for clarity; listed link speeds are production actuals; distances between Hubs are not to scale

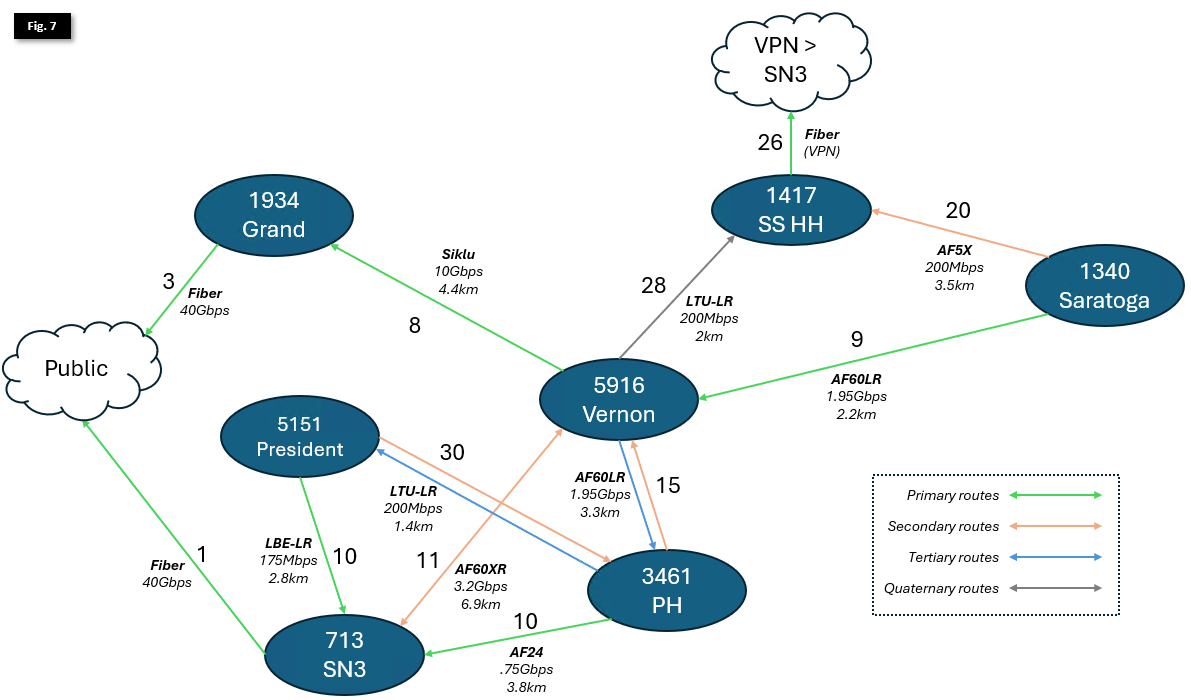

Figure 7 illustrates primary, secondary, and tertiary routes for larger Hubs in Brooklyn.

- All link costs are symmetrical to ensure routing is consistent for download/upload to the Public Internet

- Links are marked with directional arrows to indicate Public Internet exit paths

- Bidirectional costs, link capacity, and physical distance are noted on the links

As we can see, deciding on primary routes depends on both the capacity of individual links, as well as link distance (for rain resiliency) and count of hops (for latency) to an internet exit.

Let's start by identifying the internet exits:

- Both 713 - Supernode 3, and 1934 Grand St. have 40Gbps fiber uplinks to the Public Internet. While they carry more traffic than indicated in this diagram, there is enough capacity in each of these links to comfortably carry all NYC Mesh traffic if necessary. SN3 has cost 1 to exit, and Grand has cost 3.

- 1417 - Hex House (Soft Surplus) serves a number of nodes and small Microhubs, and leverages a Wireguard VPN connection over consumer fiber internet to connect to SN3. It also serves as a backup route for Vernon and Saratoga. To ensure it doesn't take too much traffic, it has a total cost of 26+1=27 to exit through SN3.

Next, let's look at 1340 - Saratoga

- This hub serves a large number of nodes and Microhubs, carrying 100-400Mbps of traffic at any given moment.

- Its primary exit is through a 1.95Gbps AF60LR to Vernon, with total exit cost of 9+8+3=20 via Grand.

- This 2.2km 60GHz link occasionally experiences interruptions in heavy rain and snow.

- Its secondary exit is a 200Mbps AF5X to Hex House, with a total exit cost of 20+26+1=47.

- Although this 3.5km 5GHz link does not have enough capacity to consistently carry all local traffic, it is extremely resilient to weather impacts, making it preferable over a high-frequency, high-capacity antenna.

Moving south now to 3461 - Prospect Heights:

- Similar to Saratoga, this hub serves a large number of nodes, Microhubs and Hubs, carrying 200-500Mbps of traffic at any given moment

- Its primary exit is through a 750Mbps AF24 to SN3, with total exit cost of 10+1=11 via SN3.

- While this 3.8km 24GHz link has lower capacity than a similar 60GHz model, it has much better weather resiliency and experiences only a few minutes of downtime per year

- Its secondary exit is a 1.95Gbps AF60LR to Vernon, with total exit cost of 15+8+3=26 via Grand.

- This 3.3km 60GHz link is less resilient to weather, and is intended only as a failover in case the AF24 link goes down due to hardware malfunction or SN3 outage.

- Its tertiary exit is a 200Mbps LTU-LR to 5151 - President, with total exit cost of 30+10+1=41 via SN3.

- Similar to Saratoga's secondary route, this 1.4km 5GHz link is extremely resilient to weather impacts.

To the West we look at 5151 - President

- While this hub serves 15-20 members, normally only carrying ~25-100Mbps in traffic, its location and height make it worthwhile to include in our analysis as it serves as a backup to multiple Hubs.

- Its primary exit is through a 175Mbps Litebeam LR to SN3, with total exit cost of 10+1=11.

- This 2.8km 5GHz link is extremely resilient to weather impacts, and given the smaller footprint and bandwidth requirements of this Hub, is preferred over 60GHz hardware.

- Its secondary exit is a 200Mbps LTU-LR to Prospect Heights, with total exit cost of 30+10+1=41 via SN3.

- It also has a tertiary 5GHz 125Mbps exit through 1635 - Park Slope (not shown in this diagram), and is the secondary exit for that Hub.

Finally, let's look at 5916 - Vernon

- The Vernon Hub is our largest and most heavily trafficked in Brooklyn, serving a very large number of nodes, dozens of Microhubs, and many Hubs as a primary exit to the Public Internet. Its location and height advantage over nearby neighborhoods make it a critical backbone of the Mesh. It typically carries 600-1200Mbps of traffic.

- Its primary exit is through a 10Gbps Siklu EtherHaul to Grand, with total exit cost of 8+3=11.

- This 4.4km licensed 70GHz link is fairly resilient to rain and snow, but does occasionally experience service degradation and interruptions in heavy precipitation.

- Its secondary exit is through a 3.2Gbps AF60XR link to SN3, with total exit cost of 11+1=12.

- Similar to Prospect Heights' secondary, this 6.9km 60GHz link, the longest in NYC Mesh production use, has poor weather resilience, and is intended only as a failover in case the Siklu link goes down due to hardware malfunction or Grand outage.

- Its tertiary exit is the 1.95Gbps AF60LR to Prospect Heights, with total exit cost of 15+10+1=26 via SN3.

- Similar to the secondary exit, this 60GHz 3.3km link is only intended as a bidirectional failover in case of multiple hardware failures and/or Supernode outages.

- Its quaternary exit is a 200Mbps LTU-LR to Hex House, with total exit cost of 28+26+1=55 via SN3.

- Although this 2km 5GHz link does not have enough capacity to consistently carry all local traffic, similar to Saratoga's secondary link, it is extremely resilient to weather impacts and provides an exit in cases of especially severe weather interrupting all other higher-capacity links.
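
The exit costs quoted for each Hub can be cross-checked by modeling the links described above as a small graph and searching for the lowest-cost exit, with and without failed links. The Python sketch below is a simplified model, not a simulation of real OSPF behavior: it includes only the links and costs named in this section, treats the Hex House VPN as a single cost-26 link to SN3, and models "Public Internet" as a node. The names `LINKS` and `exit_costs` are hypothetical.

```python
import heapq

# (endpoint, endpoint, symmetric cost), taken from the Hub descriptions above.
LINKS = [
    ("SN3", "Public Internet", 1),
    ("Grand", "Public Internet", 3),
    ("Hex House", "SN3", 26),
    ("Saratoga", "Vernon", 9),
    ("Saratoga", "Hex House", 20),
    ("Vernon", "Grand", 8),
    ("Vernon", "SN3", 11),
    ("Vernon", "Prospect Heights", 15),
    ("Vernon", "Hex House", 28),
    ("Prospect Heights", "SN3", 10),
    ("Prospect Heights", "President", 30),
    ("President", "SN3", 10),
]


def exit_costs(links, down=()):
    """Lowest cost from every Hub to the Public Internet, skipping links listed in `down`."""
    failed = {frozenset(pair) for pair in down}
    graph = {}
    for a, b, cost in links:
        if frozenset((a, b)) in failed:
            continue
        graph.setdefault(a, {})[b] = cost
        graph.setdefault(b, {})[a] = cost
    # Dijkstra's algorithm, run outward from the Public Internet.
    costs = {"Public Internet": 0}
    queue = [(0, "Public Internet")]
    while queue:
        cost, node = heapq.heappop(queue)
        if cost > costs.get(node, float("inf")):
            continue  # stale queue entry
        for neighbor, link_cost in graph.get(node, {}).items():
            new_cost = cost + link_cost
            if new_cost < costs.get(neighbor, float("inf")):
                costs[neighbor] = new_cost
                heapq.heappush(queue, (new_cost, neighbor))
    return costs


# Primary exit costs with every link up.
print(exit_costs(LINKS))

# Vernon's failover sequence as its exits fail one after another.
print(exit_costs(LINKS, down=[("Vernon", "Grand")])["Vernon"])
print(exit_costs(LINKS, down=[("Vernon", "Grand"), ("Vernon", "SN3")])["Vernon"])
```

Passing more pairs in `down` reproduces the deeper failover tiers, which makes it easy to see how a proposed link cost would change a Hub's backup order before it is deployed.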

Last topic - Outage Planning

Ok, to summarize, we've done the following:

- Selected OSPF for simplicity and consistency across the network

- Defined default link costs for primary and WDS links across nodes and Hubs

- Established "triangles" for higher-capacity Microhubs serving multiple members, and adjusted costs for these links to ensure we don't bridge major Hubs

- Created secondary, tertiary, and even quaternary links for major Hubs and geographically-advantageous locations to ensure failover exits

How did that all come together, and how do we select the right balance of capacity and weather resilience? Let's look at Figure 7 one more time.

[last portion outstanding - 10 Apr 24]