Networking

- Overview

- 10-69-Net-Network

- BGP

- Birdc

- Networking 101 Training Classes

- DNS

- Hubs

- IP Mapping Method

- Mesh

- NTP

- Peering

- Supernode-Architecture

- Vpn

- VPN - L2TP/IPsec

- VPN - L2TP/IPsec Request

- VPN - WireGuard

- OSPF

- NYC Mesh OSPF Routing Methodology

- Rules and Standards

- Point-to-Point Configuration

- Juniper Point-to-Point Guide

- 10 Gigabit

- Brocade Router CLI Notes

Overview

NYC Mesh is a mesh network.

To understand a bit more about our Mesh network design concepts, please see Mesh Design

For more information on our networking concepts at a high level, please see:

We use the following routing protocols:

- BGP: Hub-to-Hub, some Rooftops, and to the Internet

- OSPF: Hubs to Rooftops, Rooftops to Rooftops, and in some VPNs

- BMX6: Older Openwrt Nodes (c. 2015), (Write-up pending)

You can connect to the network through:

- A wireless connection to an existing hub or node. See Nodes or the Map to locate one near you.

- VPN to the Mesh. See VPN

10-69-Net-Network

The 10-69 network is used to connect nodes on NYC Mesh. Every mesh router at every node on the mesh gets an IP address from 10.69.0.0/16. Each router's IP address can be computed from the router's node number. The 10-69 network supports up to two routers per node.

The 10-69 network allows for routers at nodes to auto-discover each other using OSPF with minimal configuration. This makes it possible for anyone to join the mesh with almost no intervention from other mesh members. All you need to do is request a node number. No IP addresses, subnets or VLANs have to be statically allocated, configuration can be auto-generated from your node number, and nobody has to log in to any routers at neighboring nodes to update their configuration. As long as you have line of sight to another node and configure your router to use OSPF on the 10-69 network, you can join the mesh.

Each mesh router bridges its wired and wireless interfaces together into a single interface. The 10-69 network is link-local to this bridge. The bridge's IP address is the router's 10-69 address, and every router sees 10.69.0.0/16 as directly connected in its routing table. Because the 10-69 network is link-local, you will never see 10.69.0.0/16 in the OSPF routing table. Instead, you will see a /32 (a route to a single host) for every router that's online.

All routers connected to the bridge are isolated from each other at layer 2. They can only talk to the router hosting the bridge. This is equivalent to a port-isolated switch with the host router on the uplink port. Without port isolation, the mesh would become a huge layer 2 broadcast domain: every router's bridge would be directly connected to every other router's bridge.

Port isolation also makes sure that the topology of the layer 2 network mirrors the physical layer's topology. A bridge without port isolation is a broadcast domain, but the mesh is primarily made up of point-to-point and point-to-multipoint radio links. If routers A and B connect wirelessly to router C's bridge, we want OSPF adjacencies for A-C and B-C, but not A-B. This is because routers A and B are not actually neighbors – all traffic between A and B has to flow through C.

All OSPF interfaces that use the 10-69 network are configured in point-to-multipoint mode. Like broadcast interfaces, PtMP interfaces can automatically discover their neighbors, so OSPF neighbors don't have to be set statically in the router's configuration. Unlike broadcast interfaces, RFC 2328 does not specify how OSPF should perform auto-discovery on PtMP interfaces. The mesh assumes that auto-discovery will be performed using the AllSPFRouters multicast IP address (224.0.0.5), but not all OSPF implementations support this. For PtMP interfaces, OSPF advertises a /32 to its address on that interface.

The ability to easily compute IP address from node number is also useful for troubleshooting: if you need to see if a node is reachable, convert its node number into its first router's 10-69 address and ping it.

In addition to the 10-69 network, a /26 for users of your node can be computed from your node number. The standard OmniTik config will hand these addresses out using DHCP. These addresses are computed linearly starting at 10.96.0.0/26 (followed by 10.96.0.64/26, 10.96.0.128/26, etc.).

Generating an IP address

The first router at each node can auto-generate its own 10-69 IP address using the following method:

The router's address will take the form of 10.69.X.Y. Take the node number and split it after the second digit. For example, node number 12345 becomes 123 and 45. The digits on the left, in this case 123, are bound to X, and the digits on the right are bound to Y, giving us 10.69.123.45.

Y can be between one and two digits, so it can't be greater than 99. X can in theory be any number of digits, but each number in an IPv4 address can only range from 0 to 255, so X can't be greater than 255. This means that the highest node number possible in this addressing scheme is 25,599.

For example:

| Node Number | X | Y | 10-69-net Address |

|---|---|---|---|

| 5 | 0 | 5 | 10.69.0.5 |

| 50 | 0 | 50 | 10.69.0.50 |

| 500 | 5 | 00 | 10.69.5.0 |

| 5000 | 50 | 00 | 10.69.50.0 |

| 50000 | X | Y | Not Possible |

| 25599 | 255 | 99 | 10.69.255.99 |

Adding a second router

Most of the time, one router per node is enough, but sometimes it's useful to add a second router.

To generate an address for a second router, just add 100 to Y. Going back to the previous example, the address of the second router at node 12345 would be 10.69.123.145.

For example:

| Node Number | X | Y | First router | Second Router |

|---|---|---|---|---|

| 5 | 0 | 5 | 10.69.0.5 | 10.69.0.105 |

| 50 | 0 | 50 | 10.69.0.50 | 10.69.0.150 |

| 500 | 5 | 00 | 10.69.5.0 | 10.69.5.100 |

| 5000 | 50 | 00 | 10.69.50.0 | 10.69.50.100 |

| 50000 | X | Y | Not Possible | Not Possible |

| 25599 | 255 | 99 | 10.69.255.99 | 10.69.255.199 |

BGP

The Border Gateway Protocol (BGP) is an inter-Autonomous System routing protocol.

NYC Mesh no longer uses BGP within the mesh between neighbors / members. Use of BGP within the mesh was too static for the changing network, lacked some "automatic" properties, and made it difficult to train new people.

Use outside NYC Mesh

BGP is a popular dynamic routing protocol as it is relatively simple to configure, scales well and enjoys support across multiple hardware and software vendors. The internet uses BGP to interconnect all the thousands of companies, ISPs, and Exchanges that make up the internet.

Use within NYC Mesh

NYC Mesh uses BGP to connect to the internet at Supernodes. In fact .. it is a requirement of a Supernode.

With BGP, we are able to connect our "Autonomous System" ( our organization's ID ) with other organizations, such as ISPs, and participate in the internet. By doing this, we carry our organization's IP addresses along with us and create many redundant connections to the internet.

Supernodes require BGP to connect to the internet because NYC Mesh has dedicated IP Addresses for use by our organization. In order to maintain proper functionality with the internet, we need to ensure we maintain a BGP connection at critical internet connection points, or, Supernodes.

Old Instructions

It is still possible to connect a BGP-only speaking device to the mesh, please coordinate with the larger group to do so, as it requires some planning.

Expand to see some older BGP information that is kept for informational purposes and the eventuality that BGP is needed for some devices

Expand to view details...

Communities

BGP communities can be used to classify routes that are imported or exported by an AS. Some definitions generally agreed upon by BGP speakers within the mesh are listed below. They are primarily used for interpreting the "quality" of various routes to the internet.

| Community | Meaning | Suggested interpretation |

|---|---|---|

| 65000:1001 | Internet connected by NYC Mesh | Set local preference to 130 |

| 65000:1002 | Internet connected by a fast, neutral 3rd party | Set local preference to 110 |

| 65000:1003 | Internet connected by a fast, non-neutral 3rd party | Set local preference to 100 |

| 65000:1004 | Internet connected by a slow, non-neutral 3rd party | Set local preference to 90 |

| 65000:1005 | Internet connected by a slow, NATed or possibly compromised 3rd party | Set local preference to 80 |

Prefix lists

IPv4 and IPv6 prefix lists that BGP speakers within the mesh commonly filter on (for import and export) are listed below:

IPv4

| Prefix (Bird notation) | Action |

|---|---|

| 199.167.59.0/24{24,32} | Allow |

| 10.0.0.0/8{22,32} | Allow |

| 0.0.0.0/0 | Allow |

| All others | Deny |

IPv6

| Prefix (Bird notation) | Action |

|---|---|

| 2620:12d:400d::/48{48,64} | Allow |

| fdff:1508:6410::/48{64,128} | Allow |

| ::/0 | Allow |

| All others | Deny |

How to get an ASN or IP allocation

Currently the mesh uses a spreadsheet to keep track of allocated resources. The process will be automated soon, but in the mean time please contact an existing member via Slack or email to have them help you acquire an ASN and IPv4 and/or IPv6 resources.

Examples

Some configuration examples for BGP implementations known to be in use within NYC Mesh today are listed below in no particular order.

Bird

Bird is an open source routing daemon with support for a number of different routing protocols including BGP.

**Expand Bird Example**

``` log stderr all;

router id 10.70.x.1;

function is_mesh_prefix_v4 () { return net ~ [ 199.167.59.0/24{24,32}, 10.0.0.0/8{22,32}, 0.0.0.0/0 ]; }

function is_mesh_prefix_v6 { return net ~ [ 2620:12d:400d::/48{48,64}, fdff:1508:6410::/48{64,128}, ::/0 ]; }

function set_local_pref () { if (65000,1001) ~ bgp_community then bgp_local_pref = 130; if (65000,1002) ~ bgp_community then bgp_local_pref = 110; if (65000,1003) ~ bgp_community then bgp_local_pref = 100; if (65000,1004) ~ bgp_community then bgp_local_pref = 90; if (65000,1005) ~ bgp_community then bgp_local_pref = 80; }

filter is_not_deviceroute { if source = RTS_DEVICE then reject; accept; }

filter mesh_import_v4 { if ! is_mesh_prefix_v4() then reject; set_local_pref(); accept; }

filter mesh_export_v4 { if ! is_mesh_prefix_v4() then reject; if ifname = "eth0" then bgp_community.add((65000,1005)); accept; }

filter mesh_import_v6 { if ! is_mesh_prefix_v6() then reject; set_local_pref(); accept; }

filter mesh_export_v6 { if ! is_mesh_prefix_v6() then reject; if ifname = "eth0" then bgp_community.add((65000,1005)); accept; }

protocol device { scan time 10; }

protocol direct { ipv4; interface "br0" "eth0"; }

protocol kernel { scan time 10; ipv4 { export filter is_not_deviceroute; }; }

protocol kernel { scan time 10; ipv6 { export filter is_not_deviceroute; }; }

template bgp meshpeer { local 10.70.x.1 as 65xxx; hold time 15; keepalive time 5; ipv4 { next hop self; import filter mesh_import_v4; export filter mesh_export_v4; }; ipv6 { next hop self; import filter mesh_import_v6; export filter mesh_export_v6; }; }

protocol bgp n1234 from meshpeer { neighbor 10.70.x.y as 65yyy; }

</details>

### [UBNT/EdgeOS](https://www.ubnt.com/products/#edgemax)

UBNT's EdgeOS was forked from Vyatta, which in turn borrows from [Quagga](https://www.nongnu.org/quagga/).

<details>

<summary>**Expand for UBNT/EdgeOS Example**</summary>

protocols { bgp 65xxx { neighbor 10.70.x.y { description n1234 nexthop-self remote-as 65yyy route-map { export nycmeshexport import nycmeshimport } soft-reconfiguration { inbound } } network 10.70.x.0/24 { } network 199.167.59.x/32 { } parameters { router-id 10.70.x.1 } timers { holdtime 15 keepalive 5 } redistribute { static { route-map nycmeshexportIspDefault } } } static { route 10.70.x.0/24 { blackhole { } } } } policy { community-list 101 { rule 10 { action permit regex 65000:1001 } } community-list 102 { rule 10 { action permit regex 65000:1002 } } community-list 103 { rule 10 { action permit regex 65000:1003 } } community-list 104 { rule 10 { action permit regex 65000:1004 } } community-list 105 { rule 10 { action permit regex 65000:1005 } } prefix-list nycmeshprefixes { rule 10 { action permit ge 22 le 32 prefix 10.0.0.0/8 } rule 20 { action permit ge 24 le 32 prefix 199.167.56.0/22 } rule 30 { action permit prefix 0.0.0.0/0 } } route-map nycmeshexport { rule 10 { action permit match { ip { address { prefix-list nycmeshprefixes } } } } rule 20 { action deny } } route-map nycmeshexportIspDefault { rule 10 { action permit match { interface eth0 } set { community "65000:1005 additive" } } rule 20 { action deny } } route-map nycmeshimport { rule 10 { action permit match { community { community-list 101 } } set { local-preference 130 } } rule 20 { action permit match { community { community-list 102 } } set { local-preference 110 } } rule 30 { action permit match { community { community-list 103 } } set { local-preference 100 } } rule 40 { action permit match { community { community-list 104 } } set { local-preference 90 } } rule 50 { action permit match { community { community-list 105 } } set { local-preference 80 } } rule 60 { action permit match { ip { address { prefix-list nycmeshprefixes } } } } rule 70 { action deny } } }

</details>

### [Mikrotik/RouterOS](https://wiki.mikrotik.com/wiki/Manual:TOC)

Mikrotik's RouterOS has its own closed source BGP implementation.

**TODO**

### [OpenBGPD](http://www.openbgpd.org/)

An example of a working configuration, abeit without BGP community rules, is available [here](https://github.com/bongozone/kibble/blob/master/src/etc/bgpd.conf).

<details>

Birdc

WireGuard is generally described on another page, here: VPN - Wireguard. This page is about what is needed to configure WireGuard for routing over the VPN; especially with a focus on OSPF.

A Note on Cryptokey Routing

It's worth a section to touch on the cryptokey routing feature of WireGuard and how it works with the mesh.

All WireGuard nodes list their peers in a configuration file. Among the peer configuration is a public key and a list of acceptable IP ranges for the peer. Once the tunnel is brought up, packets from inside the tunnel must match the IPs in the list. Packets are routed to the peer using the IP range list and encrypted for the destination peer with its specific public key. Given this, no two peers may have overlapping IP ranges. Therefore, routing through two different peers to another peer downstream, or the internet, on a single wireguard connection cannot be accomplished using WireGuard in this manner.

However, the cryptokey routing is per-interface. It's possible for an interface to allow "all IPs" ( 0.0.0.0/0 ) to/from a peer. All IPs and dynamic routing can be accomplished over a fully open WireGuard interface, but only with one other peer, and one new interface for each peer pair. Therefore for routed WireGuard connections a special configuration is required on both ends to make this possible.

Process

Each side of a routed WireGuard VPN link will need the following:

- A dedicated linux server to be the VPN router.

- A new WireGuard interface for the other side of the VPN.

- A routing daemon, probably BIRD, which can perform OSPF in PtP mode.

Dedicate Linux Server

The server on each side of the VPN will need to be configured appropriately. When handling the VPN, the server will have "two internets", and possibly other traffic direction. As such, it's important to configure the system correctly so that it does not "leak" traffic from the mesh onto the internet, vice versa, nor from any other network the same server handles. It is best to use a dedicated box, which will still need some custom "rules" to make it happen.

There are a few approaches to handle the traffic separation:

1) Multiple Routing Tables and firewalling

Linux has the ability to handle multiple routing tables. They are easy to define through a single file in the /etc/ path.

The BIRD routing daemon can also be configured to manage routes in the non-standard routing tables, so these two pieces easily work together.

One challenge with multiple routing tables is that a single device (WireGuard endpoint or Ethernet card) cannot be "tied" to a routing table. Instead, a rule (in addition to the firewall) is needed, to select the the routing table used for making a decision. These rules are easy to create but can be somewhat challenging to get right, and there is no good universal way to manage them.

Set up a new routing table for the mesh:

Edit /etc/iproute2/rt_tables and add a second table for the mesh:

$ vi /etc/iproute2/rt_tables

1 admin

2 nycmesh

3 public

Modify network file to add ip rules

Later in this write-up will describe how to add WireGuard interfaces and create their configuration in (if using Debian/Ubuntu) /etc/network/interfaces.d/*. If using the multiple routing tables method, additional lines will need to be added. They will be added in the example below, but show specificially here:

auto wg111

iface wg111 inet static

address 10.70.xx.1/31

pre-up ip link add $IFACE type wireguard

pre-up wg setconf $IFACE /etc/wireguard/$IFACE.conf

pre-up ip link set up dev $IFACE

pre-up ip route add 10.70.xx.1/31 dev $IFACE table nycmesh

pre-up ip rule add iif $IFACE pref 1031 table nycmesh

pre-up ip rule add from 10.70.xx.0 table nycmesh

post-down ip link del $IFACE

post-down ip rule del iif $IFACE table nycmesh

post-down ip rule del from 10.70.xx.0 table nycmesh

post-down ip link del $IFACE

Note the order of the pre-up and post-down rules, as it is critical. Some systems may vary in terms of the rules, depending on your distribution. Report back and help us update the document if something is off.

Note also the table nycmesh which is referenced by name. The name needs to match the rt_tables name.

Local Interfaces, to mesh-routers at the same site, will also need ip rules. For example:

# Mesh internal

allow-hotplug <Local Interface>

iface <Local Interface> inet static

address 10.69.XX.YY/16

post-up ip route add 10.69.0.0/16 dev $IFACE table nycmesh

post-up ip rule add iif $IFACE pref 1021 table nycmesh

post-up ip rule add from 10.69.XX.YY table nycmesh

post-down ip route del 10.69.0.0/16 dev $IFACE table nycmesh

post-down ip rule del iif $IFACE table nycmesh

post-down ip rule del from 10.69.XX.YY table nycmesh

2) Linux Namespaces / Containers.

Alternately, a newer Linux feature, namespaces, which is what containers are based on, can be used to provide segregation. Although containers and namespaces are intertwined, containers have a highly-simplified version of the general network namespace concept. As such, containers are not directly suitable for use as routing namespaces without much extra work. Therefore, the more challenging raw namespaces much be used.

This way has not yet been explored, it is more theoretical, so follow the first approach for now

WireGuard interface for each side

Each side's dedicated Linux server will need a new WireGuard interface for the connection. This is because each side needs to use 0.0.0.0/0 as the AllowedIPs so that any mesh address can be routed over the connection.

The connection will also need a pair of unique private IP addresses to route the internal traffic. At this time, the addresses should be taken from the NYC Mesh IP Ranges spreadsheet to ensure they are unique. Please ask Zach for an address pair.

Below are WireGuard configuration files which can be used as a basis for setting up a connect. (Be sure to replace the keys and addresses with the proper inputs).

Note the suggested device names -- It is recommended to name the interface as wgX where X is the destination node number the connection. This makes troubleshooting and configuration much easier..

Side 1 (Node 111):

# This is Node 111 Interface wg222

[Interface]

PrivateKey = ThisIsThePrivateKeyOnSide1

ListenPort = 51825

# Node 222

[Peer]

PublicKey = ThisIsThePublicKeyFromSide2

AllowedIPs = 0.0.0.0/0

Side 2 (Node 222):

# This is Node 222 Interface wg111

[Interface]

PrivateKey = ThisIsThePrivateKeyOnSide2

ListenPort = 51825

# Node 111

[Peer]

PublicKey = ThisIsThePublicKeyFromSide1

AllowedIPs = 0.0.0.0/0

Next, setup the VPN interfaces on each side to auto-start as part of the system. The below example will be for Debian/Ubuntu, but other Linux distribution are similar.

Side 1 (Node 111):

- Edit the file

/etc/network/interfaces.d/wg222.conf. Page the content as follows:

auto wg222

iface wg222 inet static

address 10.70.xx.0/31

pre-up ip link add $IFACE type wireguard

pre-up wg setconf $IFACE /etc/wireguard/$IFACE.conf

pre-up ip link set up dev $IFACE

pre-up ip route add 10.70.xx.1/31 dev $IFACE table nycmesh

pre-up ip rule add iif $IFACE pref 1031 table nycmesh

pre-up ip rule add from 10.70.xx.0 table nycmesh

post-down ip link del $IFACE

post-down ip rule del iif $IFACE table nycmesh

post-down ip rule del from 10.70.xx.0 table nycmesh

post-down ip link del $IFACE

Side 2 (Node 222):

- Edit the file

/etc/network/interfaces.d/wg111.conf. Page the content as follows:

auto wg111

iface wg111 inet static

address 10.70.xx.1/31

pre-up ip link add $IFACE type wireguard

pre-up wg setconf $IFACE /etc/wireguard/$IFACE.conf

pre-up ip link set up dev $IFACE

pre-up ip route add 10.70.xx.1/31 dev $IFACE table nycmesh

pre-up ip rule add iif $IFACE pref 1031 table nycmesh

pre-up ip rule add from 10.70.xx.0 table nycmesh

post-down ip link del $IFACE

post-down ip rule del iif $IFACE table nycmesh

post-down ip rule del from 10.70.xx.0 table nycmesh

post-down ip link del $IFACE

Bring Up the interface on each side like so:

#Side1# ifup wg222

#Side2# ifup wg111

To verify the tunnel is working, a ping from one side's address to the other side will yield a response. For Example:

#Side1# ping -c 2 10.70.89.1

PING 10.70.xx.1 (10.70.xx.1) 56(84) bytes of data.

64 bytes from 10.70.xx.1: icmp_seq=1 ttl=64 time=5.28 ms

64 bytes from 10.70.xx.1: icmp_seq=2 ttl=64 time=5.67 ms

--- 10.70.xx.1 ping statistics ---

2 packets transmitted, 2 received, 0% packet loss, time 1001ms

rtt min/avg/max/mdev = 5.280/5.479/5.678/0.199 ms

This indicates the tunnel is setup successfully.

Routing Daemon - Bird - OSPF

The routing daemon ( BIRD is recommended ) needs to be configured to properly convey the routes to/from the network.

Remember, it is important to not leak routes in or out of the network, especially if the VPN node it to be a transit node connecting far sides of the mesh.

Additionally, BIRD's OSPF implementation is a bit finicky -- For example, it does not fully support PTMP mode, unless it communicates with other BIRD instances.

Here is a recommended BIRD configuration for a VPN connection. Key lines will be discussed in-line in the comments.

# The router id should be the Node's 10-69-net IP.

# If the node number is 1234:

# If this is the first router, the IP is 10.69.12.34

# If this is the second router, the IP is 10.69.12.134 ( more likely )

# If this is the third router, the IP is 10.69.12.234 ( rare case; Last octet needs to be <255 )

# Important: Use this same number in your local mesh interface above!

router id <Our 10-69-net IP>;

protocol device {

scan time 10;

}

# Add Local interfaces here which will connect to other local routers, such as an Omni

protocol direct {

interface "<Local Interface>";

interface "wg*";

interface "dummy*";

}

protocol kernel {

# Only add this line below if using the multiple routing table method.

# Note this is referenced by number, use the correct number table from rt_tables file

kernel table 2;

scan time 10;

persist;

learn;

metric 64;

import none;

export filter {

if source = RTS_STATIC then reject;

accept;

};

}

protocol ospf {

import all;

export none;

area 0 {

# Add this interface clause for each local interface connecting to local routers

interface "<Local Interface>" {

# Cost of 1 is safe for this because it's just a local jumper to another router which has cost

cost 1;

# Use PtP is going to a Mikrotik Router. BIRD and Mikrotik dont speak the same PTMP

# Use PtMP if going to other BIRD instances

# Use Broadcast for special scenarios that make sense, such as at a supernode.

type ptp;

# Add Neighbors via IPs on that interface.

neighbors {

<Local Neighbor>;

};

};

# Make sure to use the "wgXXXX" interface for the remove node.

# Add one of these clauses for each wireguard connection

interface "wg<Other Node Number>" {

# Cost 15 is for a Really Good WireGuard connection. Cost of 40 is more typical for a VPN

cost 15;

# PTMP for other BIRD instances. If WireGuard it's gonna be linux so BIRD

type ptmp;

neighbors {

<Other WireGuard Node>;

};

};

};

}

Testing the configuration

Once everything is running, the WireGuard tunnel is operating, and BIRD is started, check to see if everything is connecting properly. It may be a good idea to not initially connect other mesh routers to the VPN router until after you verify it is communicating properly and not leaking routes.

Check BIRD functionality.

Start BIRD and check the OSPF protocol, see below:

# birdc

bird> show protocol

name proto table state since info

device1 Device master up 2020-03-14

direct1 Direct master up 2020-03-14

static1 Static master up 2020-03-14

kernel1 Kernel master up 2020-03-14

ospf1 OSPF master up 2020-03-14 Running

bird> show protocol all ospf1

name proto table state since info

ospf1 OSPF master up 2020-03-14 Running

Preference: 150

Input filter: ACCEPT

Output filter: REJECT

Routes: 464 imported, 0 exported, 461 preferred

Route change stats: received rejected filtered ignored accepted

Import updates: 64065 1 0 0 64064

Import withdraws: 16846 0 --- 2 16845

Export updates: 64067 64059 8 --- 0

Export withdraws: 16845 --- --- --- 0

bird> show route export ospf1

bird>

Above, we see show protocol lists ospf1 as Running. This means is has successfully connected to other nodes and is functioning properly. It may also say alone which could indicate a problem, or, could mean it has not yet connected ( it maybe take up to one minute ).

Next we see that inspecting the ospf protocol more, we have received 464 routes, and are exporting 0 routes This is the important part. In thie case exporting means we are adding some routes to the mesh. We may want to, for special cases, at this point, but if the VPN router is pass-through, then it should say 0 as a best practice.

Lastly, if we are exporting routes, we can check them with show route export ospf1. In this case we aren't so there's no problem. If we do, we can fix the problem with this knowledge.

There are many other commands within BIRD to help debug, check them out.

Networking 101 Training Classes

We have presented a few classes on network concepts and training. Here is a list of slides and videos we have made for reference.

-

Networking 101 version 1, by Zach Giles, Early 2017 Slides

-

Networking 101 version 2, by Zach Giles, December 2018 Slides, Video

DNS

DNS Infrastructure

NYCMesh maintains an internal DNS with the "fake" top-level domain (TLD) of .mesh ( dot mesh ).

Through this, services can be hosted, internal sites, etc.

Use 10.10.10.10 for your DNS server.

DNS configuration

The DNS is hosted using standard DNS zones which are made available through the Knot Resolver and Knot DNS Server.

The zone and scripts are made available via git. Once the master branch is updated, the DNS servers will periodically update and refresh their configuration.

Git Repo: github.com/nycmeshnet/nycmesh-dns

Git Repo: NYCMesh in-mesh git ( does not exist yet )

Anycast DNS and IPs

Anycast

The DNS system is available through a "trick" called Anycast. Anycast is the number one way DNS is provided on the main public internet. With anycast, many DNS servers all present the same virtual IP. They announce this IP in the routing table ( mesh routing table, BGP or other protocol ). With this, the clients believe they all have a very short route to the same network, but in fact it is a copy of the same service running many times with the same configuration. Any of the services may answer the request equally well. Reply packets are sent via the normal means.

IPs

10.10.10.10 - Resolving DNS endpoint for the mesh ( Use this one )

10.10.10.11 - Authoritative endpoint for dot-mesh TLD.

199.167.59.10 - Public DNS Resolver for anyone in the world. No Logs, No filtering.

The reason for two endpoints rather than one is to enable resolving the dot-mesh TLD separately. In-fact, the caching resolver forwards to the dot-mesh TLD server for dot-mesh addresses.

This also allows more than one node to host a resolver, or, a dot-mesh DNS server, or both.

Top Level Domains

.mesh- Internal dot-mesh domain for NYC Mesh..mesh.nycmesh.net- Public version of the same domain. Equivilent of.mesh. Available on the entire internet

Running a DNS Server

This is a Work in progress

Today there is a DNS server run at Supernode 1 as a VM. More are planned. It would be nice if at least every supernode ran a DNS clone.

In the future it is expected that anyone who wants to run one can do so using a Docker container etc.

To get a jump-start on this, check out the Git repo an take a look at the README. It's an ever-changing process.

Hubs

Hubs provide connectivity for many nodes in a neighborhood. They come in three different sizes: small, medium, and large. These categories are not strict, and you will find many variants in the field. You can modify your hub to suit your needs and the needs of other mesh members in your area.

Small

The smallest possible hub is a standard node, an OmniTik 5 POE ac, with an added LiteBeam AC connected to a sector antenna at another hub or supernode to give it a reliable uplink connection. The OmniTik can then serve nearby nodes that have OmniTiks or other compatible equipment using WDS.

For a more robust small hub that can serve more nodes, you can add one or more LiteAP AC sector antennas. Each LiteAP has a 120° coverage area, so three will cover a full 360° around the building. A hub with three LiteAPs and a LiteBeam uses four of the OmniTik’s five ethernet ports. The fifth can serve your apartment.

There are many variants on a small hub. If part of your roof is blocked, you can use fewer sector antennas, which leaves more ports for connecting your neighbors to the mesh. You can make a point-to-point connection with your LiteBeam or with an SXTsq 5 to get a higher bandwidth link to another hub. Adding an outdoor switch such as a NanoSwitch, EdgePoint , EdgePoint R8, netPower Lite 7R or netPower 15FR will give you more Ethernet ports to serve more apartments. If nobody in your building needs service, you can have three sectors and two point-to-points or three point-to-points and two sectors.

Small hubs can use the standard OmniTik config. Medium and large hubs require custom configuration.

Medium

A medium hub can support more than five radios and forward more traffic than a small hub, without requiring the building to provide indoor space for equipment. It consists of a weatherproof enclosure on the roof with AC power and a non-wireless router. The ethernet ports on the router are used for sector antennas and point-to-point links – which can be either Ubiquiti airMAX radios like the LiteBeam AC or higher capacity airFiber.

Medium hubs are somewhat rare. It’s difficult to find routers with enough Power over Ethernet ports to power all the antennas, and putting a large number of PoE injectors in a weatherproof enclosure can be impractical.

Medium hubs can support 7-8 antennas on a budget, ideally for less than $1,000. The RB1100AHx4 is a router that can be used at medium hubs.

Large

A large hub is both more flexible and more expensive than a medium hub. It requires an indoor panel with AC power. The wired router is mounted on the panel indoors, and an outdoor PoE switch like the EdgePoint S16 is mounted on the roof. The router and the switch are connected using either copper or fiber. Having a separate router and switch lets you use a router with fewer ports, which gives you a lot more options for which router to use.

Equipment at the panel uses AC power. The outdoor switch uses DC power brought up to the roof from a power supply like the EP-54V-150W, which is mounted at the panel. The antennas are powered by the outdoor switch. Power usage can be in the hundreds of watts.

Routers used at large hubs include models from the Mikrotik CCR1009 series and CCR1036 series, as well as standard PC servers running Linux. Large hubs may have more than one router.

Supernode

A supernode is a BGP-capable large hub. It’s the interface between the mesh and the public internet.

Supernodes are located in data centers so that they can be connected to an internet exchange point or directly connected to other ISPs. These interconnections are mostly made with fiber. Supernodes exchange routes with ISPs using BGP. They perform NAT to translate addresses from the mesh’s private 10.0.0.0/8 network into public IP addresses, and inject a default route into the mesh so that nodes on the mesh know how to reach the internet.

Backbone nodes (sometimes still called Supernodes)

A backbone node is a supernode, but with no radio on the roof. It is attached to other hubs with fiber.

The buildings with the most abundant, cheapest opportunities to interconnect with other ISPs are not always the tallest buildings with the best lines of sight to surrounding neighborhoods. Building a backbone node at a location with inexpensive interconnection can save money by converting expensive supernodes into large fiber-attached hubs.

Fiber-attached hubs

A fiber-attached hub is a hub of any size connected by fiber to a supernode or backbone node.

Currently, hubs are connected to other hubs and supernodes using a combination of point-to-point and point-to-multipoint radio links. Radio is susceptible to signal loss caused by rain, snow, and interference from other nearby radio transmitters. The more radio links your data has to traverse before it reaches a wired connection, the higher the odds that you experience service loss.

Ideally, every hub would be a fiber-attached hub. This would mean that everyone with line of sight to a hub would only have one wireless hop that’s subject to interference and signal loss, substantially increasing reliability. To do this, the mesh would need to lease or get donations of dark fiber that run underground between each hub and a supernode or backbone node.

IP Mapping Method

Over time, the terminology for the numbers assigned to buildings and members has evolved.

Originally a person registering was issued automatically a “Node Number”. The Node Number was then used to assign an IP address range via an algorithm to the set of devices to be installed on the building. In addition this was a bit confusing since there could be more than one member in the same building to be connected to the same set of roof devices - so the Node Number of the first installed member was used to determine the IP range. The second member connected to the same set of devices did not get their Node number used. As the IP address range were assigned based on the Node Number a big number of IP address ranges where "unused" and thus "wasted". Same for members registering but not able to connect yet, a Node Number was attributed but not used.

Since 2020 we use the terms “Install Number” (#) to refer to the number assigned to the member when a member joins (in place of Node Number) at the same time it was decided that registration getting a numerical above 8000 as (#), and needing a set of roof devices (building not yet connected to NYC Mesh) were going to get a Network Number (NN), a number that has not been used so far.

The “Network Number” (NN) refers to the number assigned to the devices on the roof. When a member joins a (NN) may or may not already exit for the building. A (NN) gets assigned to the devices when they get actually installed.

Note: The (NN) is not assigned to a member but to a set of devices installed (or to be installed) on the building. The member install number (#) is then linked to the (NN) where it connects to, and noted like #123 NN456 (Install number #123 connected to the devices at the node NN456).

Assigning the NN only at install time preserves address space and allows us to reclaim unused Network Numbers (e.g., if an install is abandoned for a period of time).

As we completed more and more installs, we needed a way use the IP address ranges that were unused.

The algorithm for mapping IP addresses to Node Number and later to Network Numbers (NN) was the result of a lot of brainstorming in the #architecture channel on Slack.

Since 2019 we have implemented the “Human Easy Split” strategy (Strategy 3 below), allowing up to 25,599 Network Numbers to be assigned.

Hi #architecture,

I'd like to propose an algorithm for making a Node Number to IP mapping programmatically.

This is not a *new* idea. Several of us have thought about it and taken a stab at it, and I'd like to officially see if we can all agree on one.

Also, the below ideas represent generally a few models of what can be done. There are infinite variations, but the generally follow the below pattern.

The idea:

- For a given Node Number ( X ), and a given IPv4 Slash 16 address space ( ex: 10.0.0.0/16 ), Get a unique IP in the range for that node.

- The mapping is generic. It could be used as a node identifier, a peering LAN, link local, public, or private.

- If we can agree, it will be much easier to peer, login to node by name/number, and generate and automate node setup without a central authority.

Use cases:

- We could for example give each node an identifier so we can always find the node and it will announce itself

- We could OSPF peer without needing additional filtering or mapping. Config can be generated

- We could BGP peer any to any without filtering, just by typing the node number of the peer

- WDS, litebeam, and wired connections can be used simultaneously without doubling the number of addresses needed each time.

For the below, two numbers will be given, Y and Z, they can be seen as 10.0.Y.Z for mapping in to the /16 block

---

Strategy 1 - As Needed:

For each node that needs an address, pick the next unused address and assign it.

This is what we do now.

Ex:

node 1 = 0 1

node 500 = 0 2

node 392 = 0 3

Advantages:

- Can fit the most nodes in the space as possible (65K)

Disadvantages:

- Need a huge lookup table

- Supereasy to overlap and make a mistake with another node

---

Strategy 2 - Straight Allocation:

For each node, split the upper and lower bits of the number across the octets, resulting in each 256 node rolling over to the next /24.

Ex:

node 10 = 0 10

node 200 = 0 200

node 256 = 1 0

node 500 = 1 245

node 2218 = 8 178

node 5000 = 19 155

node 7000 = 27 115

node 10000 = 39 55

node 11000 = 43 35

Advantages:

- Can fit the most nodes in the space as possible (65K)

Disadvantage:

- Not human understandable, will need to reference a calculator.

- Only one node per site is assumed

---

Strategy 3 - Human Easy Split:

For each node number, split the number phyiscally in two parts and map the last two digits to the last octet, and the first two ( or three ) digits to the second-to-last octet

If a node will have more than one router, increase the last octet by 100. Since we only use 0-99, 100-199 can be secondary for all nodes without hurting the human readability.

This also reserves an extra 55 IPs ( 200-255 ) for every 100 nodes as more-than-secondary IPs that would have to be assigned statically but unlikely to hurt or overlap.

Ex:

node 10 = 0 10

node 200 = 2 00

node 256 = 2 56

node 500 = 5 00

node 2218 = 22 18

node 5000 = 50 00

node 7000 = 70 00

node 7998 = 79 98

rtr 1 79 198

node 7999 = 79 99

node 8000 = 80 00

node 8001 = 80 01

node 10000 = 100 00

node 11000 = 110 00

node 25599 = 255 99

Advantage:

- Human understandable

- Built-in allowance for more than one router per node

- Can be calculated using string tools in addition to math tools

Disadvantages:

- Inefficient allocation, Only 25599 nodes can be used

- No logic for more than 2 routers per site ( will need to manually assign )

---

Strategy 4 - Bit Shift with span:

For each node number, map it to the linear numbers in the range, but shift the bits up two so that each node gets 4 addresses. This will allow for 4 routers at each site, and create a virtual /30 for each site ( 4 addresses )

Ex:

node 1 = 00000000 000001XX

node1rtr1 00000000 00000100

node1rtr2 00000000 00000101

node1rtr3 00000000 00000110

node1rtr4 00000000 00000111

node 2 = 00000000 000010XX

node1rtr1 00000000 00001000

node1rtr2 00000000 00001001

node1rtr3 00000000 00001010

node1rtr4 00000000 00001011

node 10 = 0 40

rtr 1 0 40

rtr 2 0 41

rtr 3 0 42

rtr 4 0 43

node 11 = 0 44

rtr 1 0 44

rtr 2 0 45

rtr 3 0 46

rtr 4 0 47

node 200 = 3 44

node 256 = 4 16

node 500 = 7 236

node 2218 = 35 52

node 5000 = 79 92

node 7000 = 111 28

node 7998 = 126 240

rtr 1 126 241

node 7999 = 126 244

node 8000 = 126 248

node 8001 = 127 0

node 10000 = 158 184

node 11000 = 174 152

node 16118 = 255 212

ndoe 16127 = 255 248

Advantage:

- Very efficient allocation of space

- Support for four routers per site

- No manual mapping will be needed even for special cases

- Even though the mapping is complicated, all routers at a site will be adjacent numerically.

Disadvantage:

- Not human readable, even more complicated mapping that will require a calculator.

- Not as many nodes as other strategies, only 16128 nodes can be assigned.

Mesh

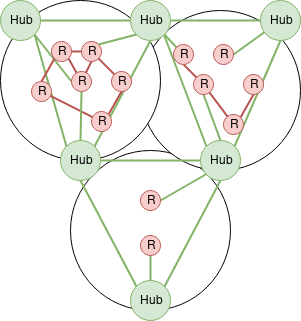

NYC Mesh is designed and run as a mesh network. As a mesh, various nodes connect to each other in a non-hierarchical way, with traffic flowing in either direction, and rerouting traffic as nodes fail.

As with all mesh networks, we must balance between becoming too much of a "star" topology vs a "mesh" topology.

Neither is fully practical -- Not literally every node next to each other can all connect to each other, nor can we sustain unlimited nodes connecting to one rooftop.

Design

We propose a design to practically cover our city ( New York City ) which features many tall buildings, regions of short buildings, multiple islands, and a dense urban population. We also want to be able to take advantage of free resources and natural features as they become available.

In this design, we propose:

- A number of community-operated sites which will be in good high location to support the mesh backbone. ( Sometimes called high-sites. )

- Rooftop sites that connect to each other and to one or more hub-nodes.

- Two mesh layers:

- One mesh network between all supernode / hub nodes. This mesh is "the full view" and can "express" you between any two neighborhoods.

- A rooftop-to-rooftop mesh network within neighborhoods.

- The in-neighborhood meshes will collect all local routes and present them to the hubs.

- Hubs will present all routes from other neighborhoods to each other and to other neighborhoods.

Example Diagram

Mesh argument

A number of people have argued for/against this approach, suggesting this is not a valid mesh layout. While it's true that this is not a traditional "by-the-book" mesh network, it seems mesh communities are all about trying out new things.

Technically we could have used one mesh standard across the entire network, however, a few concerns led us the other direction:

- Some longer running mesh networks have problems moving to newer protocols once too many nodes are deployed. We wanted to avoid this problem by creating smaller interconnected meshes.

- We had difficulting finding cheap and available quality routers to continue building the mesh. Additionally, open firmware project were undergoing internal shifts. Using standard / multiple protocols we can leverage off-the-shelf routers with multiple radios and high speeds; and mix with Libre hardware when available.

- We wanted to support experimentation with multiple protocols in different neighborhoods and interconnectivity to other mesh projects.

Our design is not anti-mesh, but rather embraces the fullness of hardware diversity and interconnectivity.

NTP

NTP Infrastructure

History: Over the years it has been evolving, changing. We used to use outside NTP servers, from the WWW , then one, and later a second member provided NTP. One via a Stratum-1 server (Antenna getting PPS (Pulse-Per-Second) via two u-blox receivers) the other one as a stratum-2 (chrony). Those 2 members moved on and another member started a new project at his "home lab" to provide NTP stratum-1. We have now setup some NTP servers using chrony and we have the member's project online (GPS PPS NTP/chrony server with a NEO 7M GPS unit on a raspberry Pi.)

Use 10.10.10.123 as your NTP server address.

What is NTP and how did it started?

The Network Time Protocol is a "network" protocol for devices to synch their clocks. See Wiki

How it all started, how does it works, with Dr Julian Onions (University of Nottingham, UK) implementing this after meeting the godfather of Internet time, Dave Mills! Video interview

IP

10.10.10.123 - NTP for the mesh ( Use this one )

NTP stats and status

Here are some stats on the NTP servers:

NTP Server at SN1 (as an example).

Alternatives

There are many NTP servers on the Net, google offers it, some hardware maker such as Ubiquiti, etc..

Peering

NYC Mesh operates AS395853

Our peering Policy is Yes

Please contact us to peer with our network.

Note this this is our Public ASN, not the Mesh Network itself.

This community-run public network supplies NYC Mesh with net-neutral internet connectivity to support the community. If you would like to join the Mesh Network, please visit our Join Page make use of this network.

Peering Policy

- NYC Mesh has an open peering policy.

- We have no requirements in terms of traffic, size, support/SLA, etc.

- We operate both IPv4 and IPv6. Peering via both protocols is appreciated.

Locations

| Building | Address | Ports |

|---|---|---|

| Sabey | 375 Pearl St, New York, NY | 1G / 10G |

Exchanges

| Exchange | City | IPv4 | IPv6 | ASNs | Routes | Speed |

|---|---|---|---|---|---|---|

| DE-CIX NY | New York, NY | 206.130.10.151 | 2001:504:36::c2ab:0:1 | 105 | ~122K | 1G |

Peering Data

ASN: 395853

IRR AS-SET: AS-NYCMESH

Peering Contact: peering@nycmesh.net

Recommended Max Prefix IPv4: 10

Recommended Max Prefix IPv6: 10

PeerDB Page: https://as395853.peeringdb.com

As we are a non-profit, please consider providing as many routes as possible, including upstream or other routes.

Peers

We have direct peering sessions with the following networks

Thank you to those who have peered!

| ASN | Organization | Exchange |

|---|---|---|

| AS42 | WoodyNet / Packet Clearing House | DE-CIX NY |

| AS714 | Apple Inc. | DE-CIX NY |

| AS3856 | Packet Clearing House | DE-CIX NY |

| AS6939 | Hurricane Electric, Inc. | DE-CIX NY |

| AS9009 | M247 Ltd | DE-CIX NY |

| AS15169 | Google LLC | DE-CIX NY |

| AS20940 | Akamai International B.V. | DE-CIX NY |

| AS27257 | Webair Internet Development Company Inc. | DE-CIX NY |

| AS29838 | Atlantic Metro Communications, LLC | DE-CIX NY |

| AS32217 | GPIEX INC | DE-CIX NY |

| AS33891 | Core-Backbone GmbH | DE-CIX NY |

| AS46450 | Pilot Fiber, Inc. | DE-CIX NY |

| AS53988 | Digi Desert LLC | DE-CIX NY |

| AS54825 | Packet Host, Inc. | DE-CIX NY |

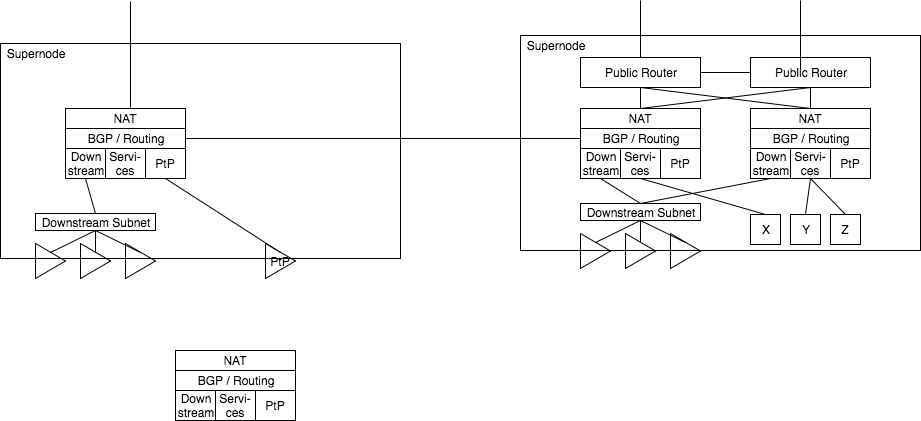

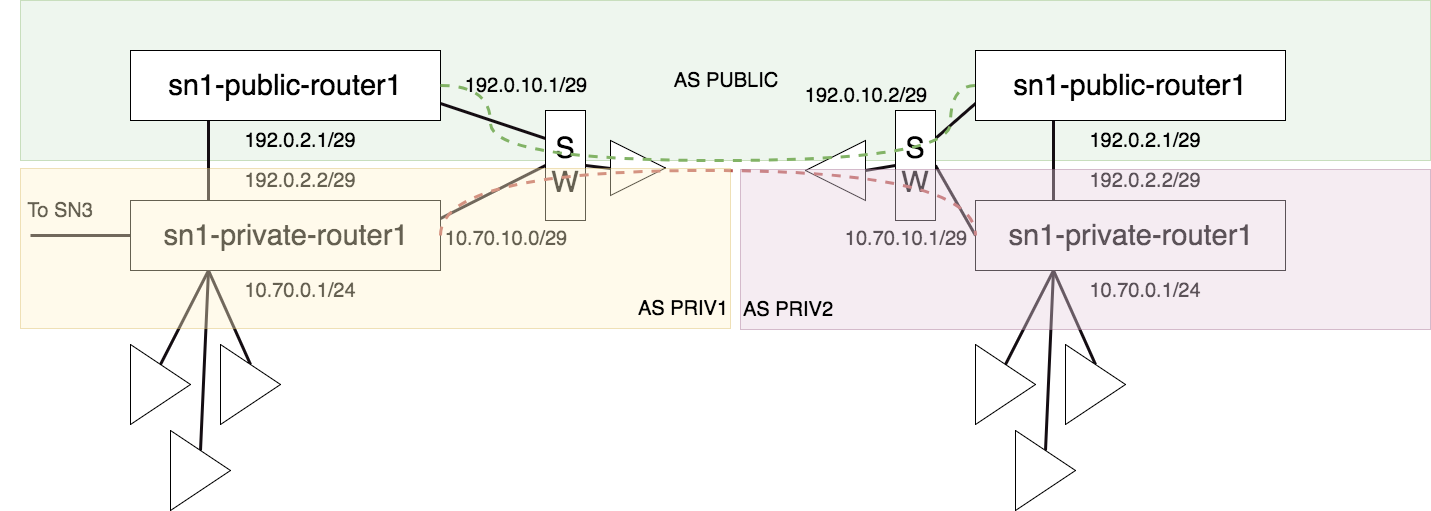

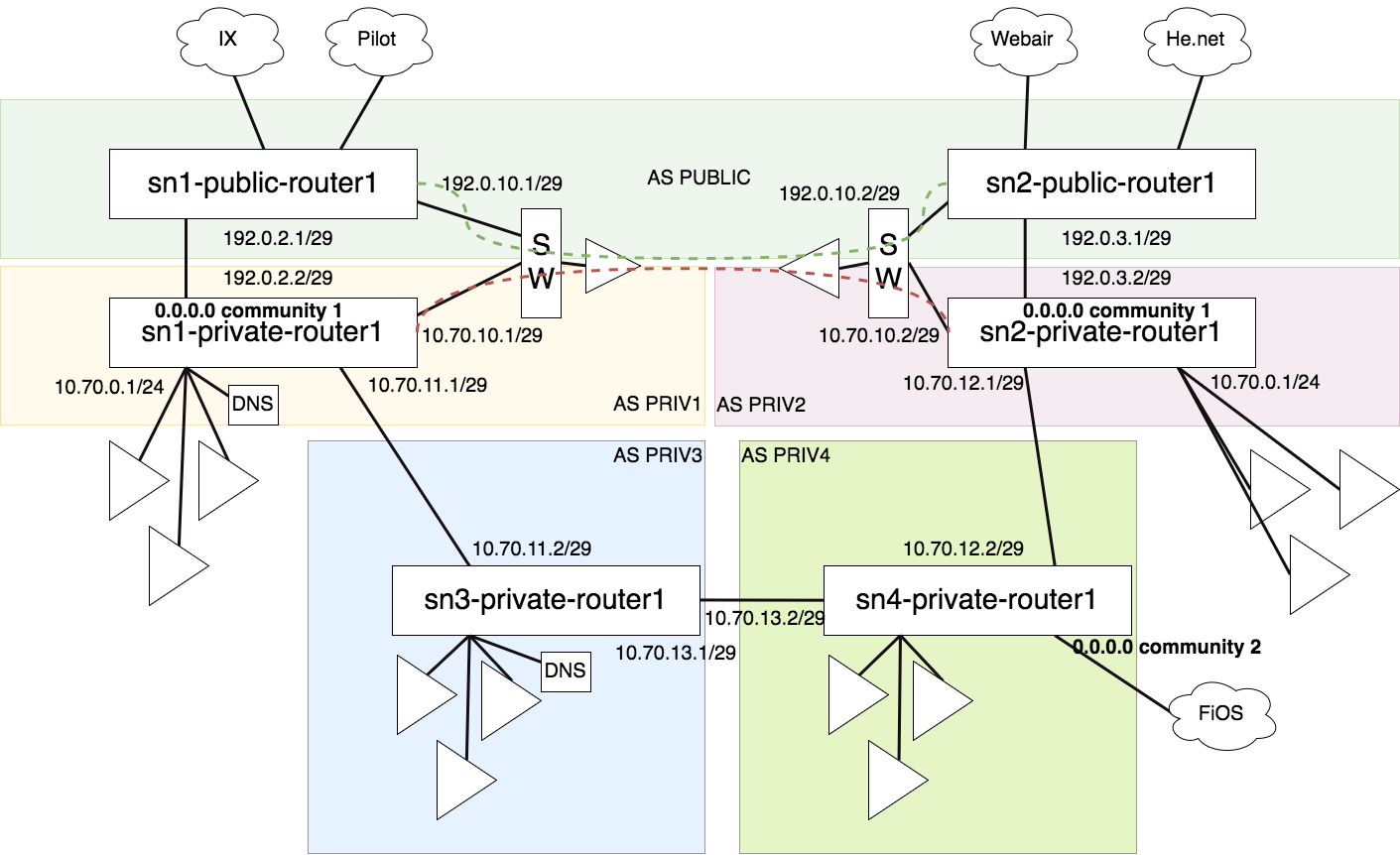

Supernode-Architecture

Goals of this documents

- Supernode routing / goals

- Supernode sample architectures

- Plan to get us to this architecture

Supernode routing / goals

"If you can get to a supernode, you can get to the rest of the mesh ( and the internet )."

- A supernode should be the regional authority on how to get to its region, other regions, and the internet.

- Translate region-local rooftop-to-rooftop protocol routes to standard routes for other regions and vice versa.

- All supernodes should have a full view of all mesh routes.

- Each supernode will get a private ASN in a sequential range with its supernode designation number.

- All routers at the supernode should use BGP confederation and act as a route-reflector.

- A supernode might also run mesh-services that will be announced to the mesh as routes.

- A supernode might provide internet access. If it does, it must:

- Announce a default route prefix internally.

- Translate any private IPv4 to public using NAT. May be CGNAT with a pool of addresses, or single IP

- Translate a specific private IPv6 prefix to public using NPTv6.

- Must tag internet connecting with their source using BGP communities.

- Must not log data nor filter data / routes.

- A supernode might participate in the NYCMesh Public Backbone by using BGP Peering if capable. If so, it will do so in accordance with that architecture.

- Links between supernodes will be routed Layer3 using an IPv4 /24 and IPv6 /64. There will be one VLAN for each link ( site-to-site ). VLANs should not be shared across multiple sites. ( Except in extenuating circumstances or testing crazy things. )

- The reason for this is to allow for multiple routers on each end if appropriate.

Supernode layout

Each supernode has one or more local routers. Each local router has some local subnet, for downstream sector antennas, service nodes, and PtP links. Each router speaks BGP to some of these services and also to neighboring supernodes.

Routers present internet access by passing a NAT layer and consuming some public IP addresses; For example, in a scenario where a single IP connection is handed off, such as Verizon FiOS, the IP is consumed by the NAT services directly.

If the supernode is also a Public Backbone routers, one or more backbone routers provide public access connectivity, with some connectivity being presented to the supernode routers for NAT consumption.

Downstream access can be presented via one or more subnets, one or more antennas, in a mixed fashion for whatever is best for that region.

Each supernode has one or more local routers. Each local router has some local subnet, for downstream sector antennas, service nodes, and PtP links. Each router speaks BGP to some of these services and also to neighboring supernodes.

Routers present internet access by passing a NAT layer and consuming some public IP addresses; For example, in a scenario where a single IP connection is handed off, such as Verizon FiOS, the IP is consumed by the NAT services directly.

If the supernode is also a Public Backbone routers, one or more backbone routers provide public access connectivity, with some connectivity being presented to the supernode routers for NAT consumption.

Downstream access can be presented via one or more subnets, one or more antennas, in a mixed fashion for whatever is best for that region.

Plan to get us to this new architecture

- In our current setup, we present a single subnet directly from our Public Router.

- We should create a second router at Supernode 1 as the supernode router.

- From this new router, we should present several subnets:

- One to Supernode 2

- One to the internal out of band routers

- One or more for downstream client access

- This router should be able to perform NAT for any subnet using a public IP pool

- We should present the Supernode 2 access subnet via a VLAN over the AirFiber 24

- From this new router, we should present several subnets:

- In Brooklyn, we should accept the additional VLAN over the AirFiber and connect it with the router part of the EdgePoint router.

- From there we can setup BGP to accept the routes and also present a default route to Manhattan

- Brooklyn can make several subnets:

- One for internal out of band router access

- One or more for downstream client access

- We can begin to shorten the lease time on the public IPs, switching clients over to private IPs slowly.

- In manhattan, we can begin to use private IPs and public IPs mixed immediately. This subnet will propagate to Brooklyn as well temporarily in-lieu of the Public IPs. As private IPs are found to have no problem, the local private IP subnet can be introduced.

Supernodes interconnecting examples

Vpn

The NYC Mesh Virtual Private Network (VPN) is a system that enables a computer that is physically disconnected from the rest of the NYC Mesh network (e.g., because it is too distant from existing nodes) to access the network. Put another way, it extends the NYC Mesh network to computers that are not physically part of the mesh. This is used for a number of different purposes, including to provide access to intra-mesh services, ease new node installations, bootstrap new neighborhoods, and more.

A VPN (virtual private networking) is a virtual network, creating a tunnel, or express route, across another network, such as the internet. Within the tunnel the network has no knowledge of the outside network, making it appear as if the entire network is seamless and directly connected. The outside network also has no knowledge of the inside network, making it a safe way to traverse dangerous or unknown networks.

VPN Infrastructure

NYC Mesh maintains some common VPN infrastructure for use by mesh members.

Please feel free to use the VPNs. However, please note that NYC Mesh is not a commercial VPN provider or reseller, nor are we trying to achieve an Internet-based darknet. The VPN service is subject to change and/or breakage at any time. Do not rely on NYC Mesh's VPN service as your primary or critical VPN provider. Also, as with all NYC Mesh resources, do not abuse the VPN service or the access it provides.

When might you use a VPN

We run a VPN service for a variety of reasons. You might want to use the NYC Mesh VPN to:

- tie distant neighborhoods together. For instance, if a distant part of the mesh is too far away from the other nodes to make a reliable physical connection, you can use the VPN to (logically) tie the mesh back together and make intra-mesh services available over the public Internet to the physically separated part of the NYC Mesh network. This isn't required, of course; the distant portion of the mesh can have its own connection to the Internet (an exit node) and maintain itself locally, but without a VPN connection it won't be able to access the rest of the mesh.

- connect your laptop to NYC Mesh over the public Internet. For example, if you are working in a coffee shop but need access to the mesh in order to conduct tests or develop and maintain mesh-specific features, you can connect to the mesh via the VPN. As another example, you can masquerade to Web sites and public Internet services as a NYC Mesh user, so that you can see the Internet from NYC Mesh's "point of view."

- configure networking equipment during an NYC Mesh installation. An installation may involve connecting to another node to configure it, which can be difficult to accomplish without already being on the mesh. By using a VPN connection, an install team member can temporary become part of the mesh prior to the completion of the physical installation.

VPN types and endpoints

Each supernode provides a few VPN options, depending on the supernode's locally available hardware. You may connect to any or all of the supernodes and, in some cases, depending on your hardware, you can even connect to multiple supernodes simultaneously. For the documented currently available endpoints, see § endpoints, below.

Choosing a VPN endpoint

Although you can generally use whatever endpoint you wish, you should consider a combination of factors for the best experience using the NYC Mesh VPN service. These considerations include:

- VPN software support on your computer.

- VPN protocol support provided by the NYC Mesh VPN endpoint.

- Your goal in connecting via the VPN; do you intend to connect a single device (laptop, phone, home router, etc.), or will you do meshing?

Based on these decisions, you will need to choose a different protocol and setup procedure. If your computer does not support any of the VPN protocols our endpoints do, you may need to connect using a different laptop.

Endpoint types

The NYC Mesh VPN service currently offers VPN connectivity using the following protocols.

Each page will list endpoints available for that protocol.

L2TP/IPsec

L2TP/IPSec is a common general-purpose VPN protocol that work with most platforms. For example, computers running Windows, macOS, iPhones, and Android devices all support this type of VPN out-of-the-box. This type of VPN is a little bit oldschool, in that it is typically found in enterprise corporate environments, which is part of what makes it so ubiquitous.

For this reason, we have decided to provide and endpoint of this protocol.

For configuration instructions, please see our L2TP/IPsec page.

WireGuard

WireGuard is a modern type of VPN that was originally developed for Linux. There are now versions of the WireGuard VPN software available for recent Windows, macOS, iPhone, and Android devices as well; however, some older versions of those platforms may not support Wireguard. WireGuard is also typically fast, but is a bit more challenging to set up.

Other VPN types

At this time, we have not set up other VPN types, but we would like to in our spare-time. Other VPN protocols we are considering include: OpenVPN, and VTrunkD.

VPN - L2TP/IPsec

L2TP/IPSec is a common general-purpose VPN protocol that work with most platforms. For example, computers running Windows, macOS, iPhones, and Android devices all support this type of VPN out-of-the-box. This type of VPN is a little bit oldschool, in that it is typically found in enterprise corporate environments, which is part of what makes it so ubiquitous. For this reason, we have decided to provide and endpoint of this protocol.

Technically speaking, it is a combination of L2TP and IPsec protocol standards. The former protocol (L2TP, the Layer 2 Tunneling Protocol) carries a "call" over the Internet, while the latter protocol suite (IPsec, Internet Protocol Security) encrypts the call and authenticates the participants. Although it's possible to use either protocol without the other, L2TP and IPsec are most often used together. This is because L2TP creates a connection but does not secure the connection, while IPSec protects traffic but does not itself create a network connection to carry traffic.

L2TP/IPsec VPNs are often slower than more modern protocols because the implementation on smaller routers is usually implemented in software which makes heavy use of the router's CPU. On the other hand, more expensive routers often have a custom chip to speed-up IPSec encryption, actually making L2TP/IPsec the fastest choice in certain cases.

The best performance you can reasonably expect from on small routers is around ~100Mbps.

Device support

The L2TP/IPsec protocol enjoys very wide support across platforms and vendors.

- Windows devices: Should work - Guide Here

- Apple devices: iPhones, Mac laptops, natively - iOS Guide Here, macOS Guide Here

- Android devices: Most yes - Guide Here

- Linux: Yes, complicated without GUI, works well with Network Manager - Guide Here

- Ubiquiti EdgeOS: Yes, but slow - Guide Here

- Mikrotik devices: All ( various speeds ) - No Guide Yet

- OpenWRT: Should work - No Guide Yet

Endpoints

This section provides connection information for NYC Mesh VPN endpoints that use L2TP/IPsec.

Supernode 1

- Server domain name:

l2tpvpn.sn1.mesh.nycmesh.net - Supported connection methods:

- Anonymous:

- Username:

- Password:

- Pre-shared key/secret:

- OSPF Node-Peering (Same, connect as above, use OSPF afterwards.)

- Anonymous:

Supernode 3

- Server domain name:

l2tpvpn.sn3.mesh.nycmesh.net - Supported connection methods:

- Anonymous:

- Username:

- Password:

- Pre-shared key/secret:

- OSPF Node-Peering (Same, connect as above, use OSPF afterwards.)

- Anonymous:

Account

N.B. Your account will be specific to SN1 or SN3 - note in the comments if you have a preference

Connection Guides

Please follow the below connection guides for each platform.

Windows 10

Expand to view instructions...

- Click on Start (Title menu) and type VPN

- Click on on Change Virtual Private Networks (VPN)

- Click on the plus button (Add a VPN connection)

- Choose VPN provider (Microsoft by default)

- Connection name (Name it whatever you want)

- Server name or address

l2tpvpn.sn1.mesh.nycmesh.net - VPN Type: L2TP/IPsec with pre-shared key

- Pre-shared key:

nycmeshnet - Type of sign-in info: User name and password

- Username:

your personal user name - Password:

your personal password - Check box to remember password so you don't have to type this everytime

- Click save

- Click on newly created VPN connection and click connect

macOS

Expand to view instructions...

See Apple Support: Set up a VPN Connection. Be sure to use the appropriate authentication credentials for your connection. For an anonymous connection, enter your personal user name as the "account name" and your personal user name in the User Authentication's "password" field and 'nycmeshnet' the Machine Authentication's "Shared Secret" field.

Apple iOS

Expand to view instructions...

These instructions refer to Apple-branded handheld devices such as the iPhone and iPad.

- Go to Settings

- Tap on VPN

- Tap on Add VPN Configuration

- Tap on Type and choose L2TP

- Description (Anything you want)

- Server:

l2tpvpn.sn1.mesh.nycmesh.net - Account:

your personal user name - Leave RSA SecurID off

- Password

your personal password - Secret:

nycmeshnet

Android

Expand to view instructions...

See How-To Geek: How to Connect to a VPN on Android § Android’s Built-In VPN Support.

GNU/Linux - GNOME/Network Manager

Expand to view instructions...

Using GNOME/Network Manager:

- Make sure you have the L2TP/IPsec NetworkManager plugin installed (

NetworkManager-l2tp-gnomeon Fedora) - Add a new VPN of type 'Layer 2 Tunneling Protocol'

- Gateway:

l2tpvpn.sn1.mesh.nycmesh.net - Username:

your personal user name - Password:

your personal passwrod(you may have to click a question mark on the right of the textbox to allow saving the password) - Click "IPsec Settings"

- Check "Enable IPsec tunnel to L2TP host"

- Pre-shared key:

nycmeshnet - Save & Connect

Ubiquiti EdgeOS

Expand to view instructions...

This example EdgeRouter configuration will let clients on your LAN reach the mesh. It requires at least EdgeOS 2.0.9 which adds support for connecting to L2TP/IPsec VPNs. You will need to be familiar with the EdgeOS CLI.

Note: there is a bug in EdgeOS's PPP configuration that prevents EdgeRouter from connecting to the NYC Mesh VPN. Make sure to configure the workaround scripts on your EdgeRouter.

Here is a minimal configuration for connecting to the Supernode 1 VPN.

First, enter configuration mode:

configure

Then, configure the L2TP client interface (you should be able to copy and paste all the lines in this block at once):

set interfaces l2tp-client l2tpc0 server-ip l2tpvpn.sn1.mesh.nycmesh.net

set interfaces l2tp-client l2tpc0 description "NYC Mesh VPN (SN1)"

set interfaces l2tp-client l2tpc0 authentication user-id 'your personal user name'

set interfaces l2tp-client l2tpc0 authentication password your personal password'

set interfaces l2tp-client l2tpc0 require-ipsec

Next, configure the IPsec tunnel:

set vpn ipsec esp-group NYC_MESH mode transport

set vpn ipsec esp-group NYC_MESH pfs disable

set vpn ipsec esp-group NYC_MESH proposal 1 encryption aes256

set vpn ipsec esp-group NYC_MESH proposal 1 hash sha1

set vpn ipsec ike-group NYC_MESH dead-peer-detection action restart

set vpn ipsec ike-group NYC_MESH proposal 1 encryption aes256

set vpn ipsec ike-group NYC_MESH proposal 1 hash sha1

set vpn ipsec site-to-site peer l2tpvpn.sn1.mesh.nycmesh.net description "NYC Mesh VPN (SN1)"

set vpn ipsec site-to-site peer l2tpvpn.sn1.mesh.nycmesh.net authentication mode pre-shared-secret

set vpn ipsec site-to-site peer l2tpvpn.sn1.mesh.nycmesh.net authentication pre-shared-secret nycmeshnet

set vpn ipsec site-to-site peer l2tpvpn.sn1.mesh.nycmesh.net local-address default

set vpn ipsec site-to-site peer l2tpvpn.sn1.mesh.nycmesh.net ike-group NYC_MESH

set vpn ipsec site-to-site peer l2tpvpn.sn1.mesh.nycmesh.net tunnel 1 esp-group NYC_MESH

set vpn ipsec site-to-site peer l2tpvpn.sn1.mesh.nycmesh.net tunnel 1 local port l2tp

set vpn ipsec site-to-site peer l2tpvpn.sn1.mesh.nycmesh.net tunnel 1 protocol udp

set vpn ipsec site-to-site peer l2tpvpn.sn1.mesh.nycmesh.net tunnel 1 remote port l2tp

Here's what your final configuration should look like. You can view it with show interfaces l2tp-client and show vpn. There are more settings here than you typed in above. That's ok. The additional settings are part of the default L2TP/IPsec config.

interfaces {

l2tp-client l2tpc0 {

authentication {

password 'your personal password'

user-id your personal user name'

}

default-route auto

description "NYC Mesh VPN (SN1)"

mtu 1400

name-server auto

require-ipsec

server-ip l2tpvpn.sn1.mesh.nycmesh.net

}

}

vpn {

ipsec {

allow-access-to-local-interface disable

auto-firewall-nat-exclude disable

esp-group NYC_MESH {

compression disable

lifetime 3600

mode transport

pfs disable

proposal 1 {

encryption aes256

hash sha1

}

}

ike-group NYC_MESH {

dead-peer-detection {

action restart

interval 30

timeout 120

}

ikev2-reauth no

key-exchange ikev1

lifetime 28800

proposal 1 {

dh-group 2

encryption aes256

hash sha1

}

}

site-to-site {

peer l2tpvpn.sn1.mesh.nycmesh.net {

authentication {

mode pre-shared-secret

pre-shared-secret nycmeshnet

}

connection-type initiate

description "NYC Mesh VPN (SN1)"

ike-group NYC_MESH

ikev2-reauth inherit

local-address default

tunnel 1 {

allow-nat-networks disable

allow-public-networks disable

esp-group NYC_MESH

local {

port l2tp

}

protocol udp

remote {

port l2tp

}

}

}

}

}

}

PPP configuration workaround

There is a bug in EdgeOS's PPP configuration that prevents EdgeRouter from connecting to the NYC Mesh VPN. Before you commit your VPN configuration, add the following scripts to your EdgeOS device:

The first script is located at /config/scripts/post-config.d/post-commit-hooks.sh. It's a helper that lets you run scripts every time you commit a new configuration.

#!/bin/sh

set -e

if [ ! -d /config/scripts/post-commit.d ]; then

mkdir -p /config/scripts/post-commit.d

fi

if [ ! -L /etc/commit/post-hooks.d/post-commit-hooks.sh ]; then

sudo ln -fs /config/scripts/post-config.d/post-commit-hooks.sh /etc/commit/post-hooks.d

fi

run-parts --report --regex '^[a-zA-Z0-9._-]+$' /config/scripts/post-commit.d

Make it executable and then run it:

chmod +x /config/scripts/post-config.d/post-commit-hooks.sh

/config/scripts/post-config.d/post-commit-hooks.sh

The second script fixes the PPP configuration for your L2TP tunnel so that you can successfully connect. It is located at /config/scripts/post-commit.d/fixup-l2tpc0.sh. Note: this is a different directory from the previous script.

#!/bin/bash

set -e

DEVICE=l2tpc0

CONFIG=/etc/ppp/peers/$DEVICE

if [ ! -f $CONFIG ]; then

exit

fi

if grep ^remotename $CONFIG > /dev/null; then

exit

fi

echo "remotename xl2tpd" | sudo tee -a $CONFIG > /dev/null

Make it executable:

chmod +x /config/scripts/post-commit.d/fixup-l2tpc0.sh

MTU workaround

Additionally, EdgeOS has a bug where pppd fails to correctly set the MTU of the L2TP interface. This is a problem if you plan to use OSPF over the VPN because OSPF requires that both peers agree on an MTU.

This script, located at /config/scripts/ppp/ip-up.d/l2tp-fix-mtu works around this issue by manually setting the MTU after the connection comes up.

#!/bin/sh

set -e

MTU=$(grep mtu /etc/ppp/peers/$PPP_IFACE | awk '{ print $2 }')

if echo $PPP_IFACE | grep -Eq ^l2tpc[0-9]+; then

ip link set dev $PPP_IFACE mtu $MTU

fi

Make it executable, and commit your configuration:

chmod +x /config/scripts/ppp/ip-up.d/l2tp-fix-mtu

commit

If all goes well, you should be connected to the VPN and be able to reach the other end of the tunnel:

ubnt@edgerouter# run show interfaces l2tp-client

Codes: S - State, L - Link, u - Up, D - Down, A - Admin Down

Interface IP Address S/L Description

--------- ---------- --- -----------

l2tpc0 10.70.72.68 u/u NYC Mesh VPN (SN1)

ubnt@edgerouter# ping 10.70.72.1

PING 10.70.72.1 (10.70.72.1) 56(84) bytes of data.

64 bytes from 10.70.72.1: icmp_seq=1 ttl=64 time=6.15 ms

64 bytes from 10.70.72.1: icmp_seq=2 ttl=64 time=6.42 ms

64 bytes from 10.70.72.1: icmp_seq=3 ttl=64 time=4.98 ms

^C

--- 10.70.72.1 ping statistics ---

3 packets transmitted, 3 received, 0% packet loss, time 2007ms

rtt min/avg/max/mdev = 4.985/5.854/6.424/0.627 ms

Additionally, the MTU of your L2TP interface should be correctly set to 1400:

ubnt@edgerouter# ip link show l2tpc0

34: l2tpc0: <POINTOPOINT,MULTICAST,NOARP,UP,LOWER_UP> mtu 1400 qdisc pfifo_fast state UNKNOWN mode DEFAULT group default qlen 100

link/ppp

Save your active configuration to the startup configuration so that your tunnel will still be there when you reboot, and exit configuration mode:

save

exit

Reaching the mesh through the VPN

So far, your EdgeRouter can reach the VPN server at the other end of the tunnel, but you can't reach any of the other devices on the mesh (try pinging 10.10.10.10; you shouldn't be able to reach it). You can fix this by OSPF peering or by adding a static route. A static route is easiest.

In configuration mode, enter the following, and commit it:

set protocols static interface-route 10.0.0.0/8 next-hop-interface l2tpc0 description "NYC Mesh"

In this configuration, your EdgeRouter will route traffic destined for the mesh's private IP network through the VPN and all your other traffic over your primary internet connection – this is sometimes called split VPN. If you use addresses from 10.0.0.0/8 for your LAN that overlap with addresses used by the mesh, the addresses on your LAN will take precedence and you will not be able to access those parts of the mesh.

Once you've installed the static route, you should be able to reach the rest of the mesh:

ubnt@edgerouter# ping 10.10.10.10

PING 10.10.10.10 (10.10.10.10) 56(84) bytes of data.

64 bytes from 10.10.10.10: icmp_seq=1 ttl=63 time=4.85 ms

64 bytes from 10.10.10.10: icmp_seq=2 ttl=63 time=4.28 ms

64 bytes from 10.10.10.10: icmp_seq=3 ttl=63 time=7.08 ms

^C

--- 10.10.10.10 ping statistics ---

3 packets transmitted, 3 received, 0% packet loss, time 2006ms

rtt min/avg/max/mdev = 4.286/5.408/7.082/1.207 ms

Remember to save your configuration to the startup config once it's working.

Using NAT to reach the mesh from a device on your network

You can now reach the mesh from your EdgeRouter, but you can't reach it from a device on your LAN like your laptop. The easiest way to do this is to use NAT:

set service nat rule 6000 outbound-interface l2tpc0

set service nat rule 6000 type masquerade

set service nat rule 6000 description "masquerade for NYC Mesh (SN1)"

Commit your config, and verify that you can reach the mesh from your laptop:

me@laptop$ ping 10.10.10.10

PING 10.10.10.10 (10.10.10.10): 56 data bytes

64 bytes from 10.10.10.10: icmp_seq=0 ttl=62 time=13.572 ms

64 bytes from 10.10.10.10: icmp_seq=1 ttl=62 time=10.603 ms

64 bytes from 10.10.10.10: icmp_seq=2 ttl=62 time=16.394 ms

^C

--- 10.10.10.10 ping statistics ---

3 packets transmitted, 3 packets received, 0.0% packet loss

round-trip min/avg/max/stddev = 10.603/13.523/16.394/2.364 ms

Once your configuration is working, save it to your startup config.

If you can ping 10.10.10.10 from the router, but not other devices on your LAN, you may need to reboot the router to clear the route cache.

Configuring .mesh DNS lookup

To use the .mesh top level domain to reach mesh services, you will need to change the DNS configuration on your EdgeRouter. The simplest way to do this is to configure your router's DHCP server to tell clients to use the mesh's recursive resolver (10.10.10.10) as their DNS server. But this causes a problem with our split VPN config: if your VPN connection goes down, you won't be able to resolve domain names, even if you're still connected to the public internet.

To fix this, you can configure EdgeOS's DNS forwarder to use the mesh's authoritative name server (10.10.10.11) for the .mesh TLD:

set service dns forwarding options server=/mesh/10.10.10.11

Additionally, you can configure the DNS forwarder to use the mesh's name server for reverse DNS lookups on 10.0.0.0/8:

set service dns forwarding options rev-server=10.0.0.0/8,10.10.10.11

Make sure to configure the DHCP server to provide your router's LAN address as the recursive DNS resolver.

To be able to reach the .mesh TLD while SSH'd into your EdgeRouter, configure your EdgeRouter to use its local DNS forwarder as its primary DNS server:

set system name-server 127.0.0.1

VPN - L2TP/IPsec Request

NYC Mesh maintains some common VPN infrastructure for use by active mesh members.

Please feel free to use the VPNs. However, please note that NYC Mesh is not a commercial VPN provider or reseller, nor are we trying to achieve an Internet-based darknet. The VPN service is subject to change and/or breakage at any time. Do not rely on NYC Mesh’s VPN service as your primary or critical VPN provider. Also, as with all NYC Mesh resources, do not abuse the VPN service or the access it provides.

Request a vpn account

Please write to "vpn @ nycmesh.net" and provide the following information, the same you provided on the join request form, to register your install

-

First name:

-

Last name:

-

Phone number:

-

Location (street address, including zip code):

-

Email:

-

Active Install number:

-

Comment:*

*please indicate if it is to be used by you, from your laptop, phone, etc.. Or if it is to be setup on your node, such as for a "remote node" or else.

VPN - WireGuard

WireGuard is a new, simple, and fast VPN implementation and protocol. For comparison, the older L2TP/IPsec VPNs typically will achieve about 100Mbps, but WireGuard VPNs may reach speeds upward of 300-400Mbps on the same hardware, or higher on a high-end workstation.

In addition to its speed, WireGuard has some great features such as built-in roaming (a single encrypted packet moves the tunnel to your new IP), cryptokey routing, and formal cryptographic verification.

On the other hand, it also has some challenges, such as pre-key exchange and a lack of automatic address assignment. Both of these problems require manual configuration on both ends of the tunnel. Cryptokey routing also presents its own challenges in some situations.